Weaving the STRANDS of autonomous robotics

Posted on 27 November 2014

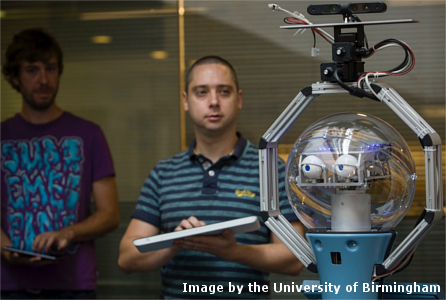


By Nick Hawes, Senior Lecturer in Intelligent Robotics at the School of Computer Science, University of Birmingham.


This article is part of our series: a day in the software life, in which we ask researchers from all disciplines to discuss the tools that make their research possible.

There is a great deal of excitement surrounding the use of robots in a variety of industries, from security and care to logistics and manufacturing.

These are not the robots of the past, which were static automatons confined to cages and empty factory floors, but interactive robots working alongside humans in everyday environments. However, to create robots that can cope with the real world in all its dynamic, unpredictable glory, we must create systems that are wholly, or at least partially, autonomous.

That is to say, they can make decisions for themselves, from how to move a single vehicle around a moving obstacle to planning how a fleet of robots can work alongside humans on a production line. The need for automated systems to process sensor data and make decisions at short notice or in uncertain conditions has turned modern robotics into as much a software challenge as a hardware one.

Autonomous robots are fascinating, complex software systems. When designing and implementing a robot system you must plan for a wide range of eventualities, from low-level control loops measured in tens of milliseconds to long-term task plans covering hours and days of robot activity. A similar range must also be spanned, often in parallel, in the representations of the world and of the rooms, objects and people within it.

I currently lead STRANDS, a European project that researches how to create and deploy mobile robots that use AI techniques to meet the challenges of operating in human environments, whilst also learning on the job as their lifetimes extend from hours to weeks and months. Prior to this I spent about six years leading teams on other large projects, such as CoSy and CogX, where I created systems that integrated various forms of AI into robotic designs.

In terms of software stack, most autonomous robots are similar. You start with low-level interfaces to talk to the hardware. These are usually provided by the manufacturer or based on a known protocol, sometimes reverse-engineered by port sniffing, which often limits your freedom to innovate. On top of this, most robotics engineers work with some kind of component-based middleware to encourage language and platform interoperability, code reuse, and sharing between projects, robots and teams. When I started out in robotics there was no standard choice of middleware, and many engineering-conscious groups, including my own, chose to build their own.
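To make the idea of component-based middleware a little more concrete, here is a toy sketch in Python. It is not ROS and uses none of its API; `ToyBus`, `LaserDriver` and `ObstacleDetector` are hypothetical names chosen for illustration. It shows only the core idea, assuming a simple in-process, synchronous design: components exchange messages on named topics rather than calling each other directly, so a hardware driver could be swapped out without touching the components downstream of it.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable


class ToyBus:
    """Hypothetical in-process message bus (not ROS): components share
    named topics instead of holding direct references to each other."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        # Register a callback to receive every message published on `topic`.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver synchronously to each callback registered on this topic.
        for callback in self._subscribers[topic]:
            callback(message)


class LaserDriver:
    """Hypothetical low-level component wrapping a range sensor."""

    def __init__(self, bus: ToyBus) -> None:
        self.bus = bus

    def read(self, ranges: list[float]) -> None:
        # In a real robot these values would come from the hardware interface.
        self.bus.publish("scan", ranges)


class ObstacleDetector:
    """Hypothetical higher-level component; it only knows topic names."""

    def __init__(self, bus: ToyBus, threshold_m: float = 0.5) -> None:
        self.bus = bus
        self.threshold_m = threshold_m
        bus.subscribe("scan", self.on_scan)

    def on_scan(self, ranges: list[float]) -> None:
        if not ranges:
            return  # ignore empty scans rather than crash
        self.bus.publish("obstacle", min(ranges) < self.threshold_m)


bus = ToyBus()
alerts: list[bool] = []
bus.subscribe("obstacle", alerts.append)
ObstacleDetector(bus)
LaserDriver(bus).read([2.0, 0.3, 1.5])
print(alerts)  # [True]
```

Real middleware such as ROS adds what this toy leaves out, including separate processes, network transport and typed message definitions, which is where much of the learning curve lies.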

Thankfully those dark days are past, and now ROS is, for better or worse, the dominant choice in the robotics community. This development has been hugely important for the community, as it is now possible to create a highly functional robot system with very little investment, except to overcome a rather challenging learning curve.

Indeed, without the de facto standardisation provided by ROS, the field of robotics would have made much less progress in recent years. The fact that ROS is open source is also crucial to many of its users, and it encourages many to re-share their own work, as we are doing in STRANDS, which benefits the community once again.

When developing autonomous robot software systems, I always find the largest problem to be integration in its many forms. While we work with many talented scientists and engineers who are able to produce good software components, the process of integrating these components into full systems is always more challenging and time consuming than you might expect.

When plugging components together you always find that things behave differently, typically when inputs are not the sanitised, predictable ones you used when testing each component on its own. This is exacerbated when running on machines under the heavy load that only occurs when multiple components are put together for real, something that typically only a few team members will actually do.

However, there is also a huge social integration needed in this process. Collaborators must understand the capabilities, or lack thereof, of the components before and after theirs in an architecture, and how this affects the performance of the system overall. Integrators must also have some knowledge of the science and engineering underlying each component to ensure they are deployed correctly in a wider architecture. It is this complexity, and the breadth, rather than depth, of skills required that has always excited me and still does.

In addition to the above issues, the fact that a robot is a software system embodied in the real world brings challenges of its own. You can never fully predict how sensors and motors will perform, what range of inputs your system will face, or over what time periods. No matter how good simulator platforms get (and the one we prefer, MORSE, is pretty good indeed), you can never capture all the dynamics and uncertainty that exist in the real world on your chosen hardware platform.

So, my wish list for future software development is a simulator that really can mimic reality as experienced by a robot, including what we term inquisitive passers-by, and, crucially, do this fast enough for us to see how our systems perform over days of deployment in hours of real time.