The WTFs/min indicator of code quality

Posted on 2 September 2011

In the OGSA-DAI blog, Adrian Mouat wrote a blog post about code quality - illustrated by a rather accurate diagram from OSNews. We've kindly been allowed to repeat the post below.

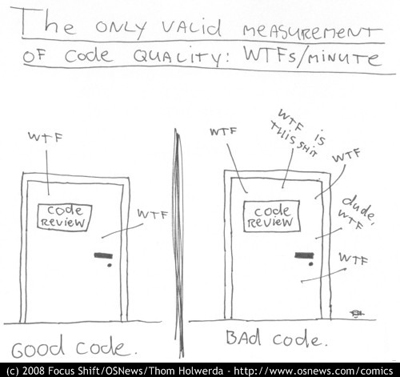

As part of my work on the OGSA-DAI Visual Workbench project, I needed to evaluate the ADMIRE codebase. This led me to investigate the issue of how to define software code quality and how to assess it. Note that I wasn’t investigating the functional qualities of the code – whether or not it meets the user’s requirements – but how well written is the code itself? If you’ve done any research into this problem, you probably quickly realised that a lot of people have a lot of opinions, but it’s hard to point to a satisfying, objective description of code quality (in contrast to functional quality, which even has standards such as ISO 9126). It seems the most common definition is that shown in the comic strip to the right.

It appears facetious at first, but perhaps hints at a deeper truth: high-quality code should not surprise us; we should be able to quickly and easily discern the structure and intent of the code. But doesn’t this then place software quality in the eyes of the beholder? What if the person evaluating the code isn’t familiar with the patterns used by the original developer? (Or – to be more cruel – what if they just aren’t competent enough to recognise the patterns used?) Are they not likely to harshly judge the code based on miscomprehension? (A similar suggestion is that software quality is inversely related to the amount of time required to make a modification; again, the major problem is that this depends on the capability of the person making the change.)

Perhaps then we should define software quality in terms of metrics – objective and automatic measures that can be applied to any code. The NDepend site offers a large and well-explained list of these. Surely quality software will have a high percentage of comments and a low cyclomatic complexity? Unfortunately, it’s not as simple as that. Try running a metrics application on your source code: you’ll get back a bunch of numbers and perhaps a few warnings. Is a cyclomatic complexity of 15 bad? The answer is: it depends. Whilst these metrics can help identify areas where things are going wrong, they still need human intuition to decide whether there is actually something wrong or not. For example, a fairly typical and easy-to-understand function for processing command-line arguments is likely to be reasonably long and contain a high number of branches, and will therefore score badly on several metrics regardless of whether or not most developers would consider it to be good code.
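To make the command-line example concrete, here is a minimal sketch in Python. The `rough_complexity` function is a deliberately simplified stand-in for a real metrics tool (it just counts decision points with the standard `ast` module), and `parse_args` is a hypothetical argument parser invented for illustration; neither comes from the codebase discussed in this post.

```python
import ast

# Node types treated as decision points in this simplified count.
# Real tools use more refined rules; this is only an approximation.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def rough_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as 1 + number of decision points."""
    count = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            count += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra paths through the expression.
            count += len(node.values) - 1
    return count


# A hypothetical, easy-to-read argument parser, held as source text
# so that it can be measured.
ARG_PARSER = '''
def parse_args(argv):
    opts = {"verbose": False, "output": None, "force": False, "inputs": []}
    i = 0
    while i < len(argv):
        arg = argv[i]
        if arg in ("-v", "--verbose"):
            opts["verbose"] = True
        elif arg in ("-f", "--force"):
            opts["force"] = True
        elif arg in ("-o", "--output"):
            i += 1
            if i >= len(argv):
                raise ValueError("missing value for --output")
            opts["output"] = argv[i]
        elif arg.startswith("-"):
            raise ValueError("unknown option: " + arg)
        else:
            opts["inputs"].append(arg)
        i += 1
    return opts
'''

print(rough_complexity(ARG_PARSER))  # prints 7
```

With only three options the score is already 7, and each further option adds at least one more branch, so a realistic parser climbs past common warning thresholds quickly even though every branch follows the same obvious shape.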

So much for metrics then: another tool in the box, but no panacea. It seems we still need to dig further into what we mean by software quality. This can quickly lead us down philosophical rabbit holes, as it did the WikiWikiWeb community, who ran into the roadblock proposed by Robert M. Pirsig in Zen and the Art of Motorcycle Maintenance and repeated by Richard P. Gabriel in Patterns of Software:

“In Zen and the Art of Motorcycle Maintenance we learn that there is a point you reach in tightening a nut where you know that to tighten just a little more might strip the thread but to leave it slightly looser would risk having the nut coming off from vibration. If you’ve worked with your hands a lot, you know what this means, but the advice itself is meaningless. There is a zen to writing, and, like ordinary zen, its simply stated truths are meaningless unless you already understand them—and often it takes years to do that.”

This quote (by analogy) suggests that only an expert, or at least an experienced developer, is likely to be able to analyse software quality. A corollary would also seem to be true: sometimes we can’t tell if our own code is high quality or not – we think we’ve done a good job, but then someone with better insight points out a way we could make it 10x cleaner.

Despite all this, surely we can say something useful about how to go about analysing software quality? At the very least it seems we can identify attributes of quality software (as well as the opposite, attributes of bad software). This seems to be the approach that the relatively nascent Consortium for IT Software Quality (CISQ) have taken. To the best of my knowledge, they have not released a formal document on measuring software structural quality but intend to do so. However, Wikipedia (at the time of writing) has a non-cited list of their criteria, which I paraphrase here:

- Reliability. What is the likelihood and risk of failures?

- Efficiency. What is the performance and scalability of the code?

- Security. How likely are security breaches due to poor coding practices or architectural issues?

- Maintainability. How hard is it to make changes to code? Is it portable?

- Size. A larger code-base is less maintainable. As far as I can tell, this should really come under the maintainability criterion; however, high-quality code will tend to be concise.

Frankly, however, I would prefer more bite-sized criteria that are easier to apply. Perhaps later work from CISQ will break down the above criteria into more practical chunks. In the meantime, I suggest some other criteria, based on several sources, including the Software Sustainability Institute, Stack Overflow and MSDN Magazine:

- Readability. Is the source code legible? Is it correctly formatted and idiomatic?

- Patterns. Has the author used common patterns where appropriate? Similarly, have they avoided anti-patterns?

- Testability. Are there unit tests? How much coverage? How hard would it be to add such tests?

- Data structures. Are the data structures used appropriate for the code? Are they at the correct level of abstraction?

- Algorithms. Does the code use algorithms appropriate to the problem being solved and the data structures used? Have they been implemented clearly and correctly?

- Changeability. How hard is it to make changes to the codebase? Is it modular? How difficult would it be to change an algorithm or data structure?

- Simplicity. Does the code avoid unnecessary complexity? Is it concise without being obfuscated?

From there we can include even more specific advice, such as avoiding commented-out code (readability) and magic numbers (readability, changeability). One of the best references for identifying issues with code is Refactoring by Martin Fowler, which includes a list of common problems or code smells. A lot of this sort of advice has been embedded into tools such as PMD and FindBugs for Java, and most compilers will also issue warnings for common problems. However, whilst simply following all the advice given by such tools will go a long way towards producing quality software, it is clear that merely following rules will not turn a bad architecture into a good one or a novice programmer into an expert.
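As a small illustration of the magic-number advice, here is a sketch in Python. The retry scenario and all the names in it are hypothetical, chosen only to show the before-and-after shape of the change.

```python
# Before: the reader has to guess what 3 and 0.5 stand for,
# and changing either means hunting for every occurrence.
def retry_delay_before(attempt):
    if attempt > 3:
        return None
    return 0.5 * attempt


# After: the same logic with the numbers named (hypothetical names).
MAX_RETRIES = 3
BASE_DELAY_SECONDS = 0.5


def retry_delay(attempt):
    """Return seconds to wait before the given attempt, or None to give up."""
    if attempt > MAX_RETRIES:
        return None
    return BASE_DELAY_SECONDS * attempt
```

The behaviour is identical, but the second version states its intent and gives a single place to change each value, which is the readability and changeability gain mentioned above.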

To wrap up, it seems that the closest we can come to determining software quality is to take a list of quality attributes and ask a competent developer to assess a given codebase accordingly. Of course, this immediately leads to the nearly as elusive question: how do you identify a competent developer?

Further Reading or How to Improve Code Quality

Code Complete by Steve McConnell contains a lot of great advice on writing quality code. It also contains a discussion of software quality, which identifies the important attributes as maintainability, flexibility, portability, reusability, readability, testability and understandability.

Refactoring by Martin Fowler will help you recognise areas where you can improve things.

You also need to be aware of common design patterns. Head First Design Patterns provides a very readable introduction.

However, there is no substitute for simply learning from a good programmer, ideally by pair programming.