Open Data Science Conference UK 2016

Posted on 20 October 2016

By Olivia Guest, Software Sustainability Institute Fellow

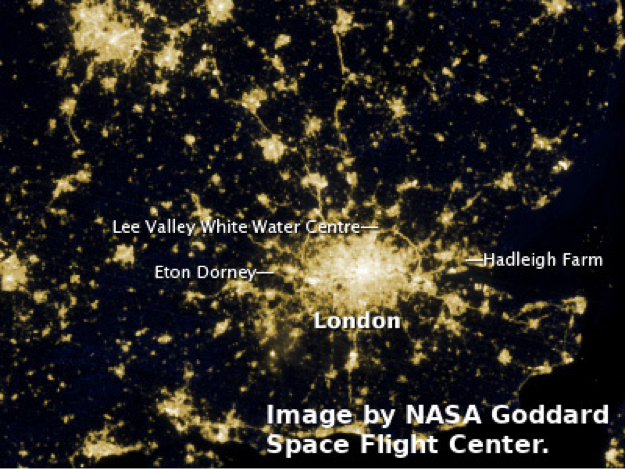

The Open Data Science Conference (ODSC) was held in London for the first time on October 8th and 9th. As far as I understand, it has its roots in the US and has only recently expanded to another continent. I’m not sure what I expected, as I was still very much recovering from PyCon UK (yes, I’m a lightweight). However, I had noticed that quite a few talks were on packages that I and/or my colleagues use (e.g., TensorFlow and scikit-learn), so I was excited to see how others use them and for what.

The first talk was delivered by Gaël Varoquaux, a core developer of scikit-learn, joblib, and other Python packages. He touched on a number of important issues. Firstly, he defined the work of a data scientist as the combination of statistics and code. While I’m not completely convinced, I do notice the parallel between this definition and my (although not uniquely my) definition of computer science: mathematics plus engineering. Secondly, he talked about hypothesis testing within the computational sciences, applying to this process a software architectural pattern known as Model–view–controller (MVC). He also explained his workflow when playing with data and testing hypotheses: he starts out with a script and moves towards increasingly structured code, until eventually the codebase turns into a library, which I suppose is how scikit-learn was born.

Additionally, he stressed the importance of applying basic software engineering practices in appropriate amounts (i.e., weighing the costs and benefits of over- versus under-engineering at each step). Finally, he talked about new features in scikit-learn and joblib. I must admit that I don’t use scikit-learn much, as other Python libraries are better suited to what I work on (e.g., TensorFlow and Seaborn); however, it was useful to be reminded of what it offers that complements other libraries and what is unique to it. If you’re interested, you can find his slides here.

ODSC had never been held in London before, so it was a blank slate in many ways, and some of the impressions I formed were extremely positive! However, there were some issues in my experience. On the one hand, some of the attendees I interacted with were unprofessional: they asked many irrelevant, probing personal questions, outed other attendees, and pushed the limits of what are considered civil remarks and requests. While nothing too negative transpired, I’m a relatively seasoned conference-goer, so I’m aware that such behaviour can make the atmosphere increasingly worse if left unchecked. I am certain the organisers and attendees would agree that it is important to be inclusive, and that this can easily be addressed at the next ODSC in London. In my opinion, given that there was a code of conduct, the organisers might have benefitted from reminding us of it in the opening remarks of each day and reiterating whom people should talk to if any violations occur.

On the other hand, I met some great people and attended excellent talks. I even met, for the first time in person, a co-author of a paper we had published together! I was also lucky enough that Page Piccinini, of CogTales and #barbarplots fame, spotted me and said “hello”. Over lunch, Gaël, a few others, and I ended up discussing, amongst other things, questionable research practices in science, the replication crisis, and ReScience, a journal (hosted fully on GitHub!) that Nicolas Rougier and others, including myself, work on. In the following paragraphs, I’ll outline two of my favourite talks.

Predictably, the talks I particularly enjoyed were those about the computational methods and/or libraries I use. I therefore really enjoyed the talk by Chris Fregly. His talk not only had the longest title (“Incremental, Online, Continuous, and Parallel Training and Serving of Spark ML and TensorFlow Models with Kafka, Docker, and Kubernetes”) but was also one of the most detailed talks at ODSC regarding the computational techniques he uses. The rest of the audience and I really appreciated this level of detail, and I think every technical talk at every conference should offer it, especially at conferences focussed on openness. Using his pipelineIO, Chris gave us a detailed account of how to use Docker and Kubernetes (amongst other tools) to create a recommendation pipeline powered by a stack of PANCAKEs. I really can’t do this talk full justice in a blog post, so please check out the various resources Chris has put together.

Another great TensorFlow-related talk was given by Yaz Santissi. This was exactly the kind of talk I had been wanting to attend for a while, not least because here was somebody who actually understands that some of us (Mac users; at least me and a friend I made in this talk) struggle with installing TensorFlow. We end up either SSH-ing into our Linux boxes (when we can) or falling into a pit of despair (when we can’t). Yaz showed us how to install TensorFlow very effectively using Docker. With just a few terminal commands, we installed TensorFlow in a container, downloaded some weights, retrained them to do something specific to our needs, and ran the network with the new weights on some new test inputs.

In this case, we retrained GoogLeNet Inception-v3, which had been trained to categorise photos into the 1000 synsets from ImageNet, to instead categorise photos into 5 different types of flowers. This is called transfer learning: the basic building blocks the network has derived to categorise things into lions, tigers, cars, bicycles, and so on can be repurposed to help it learn the differences between previously unseen flowers (dandelions, daisies, tulips, sunflowers, and roses). We then tested the network. Another audience member asked for it to classify a rose, and when Yaz asked what colour, I cheekily said “black”, which is extra cheeky since black roses do not exist (though tulipomania did indirectly inspire a book, which in turn inspired a grower to create black tulips). As I expected from having played around with this network on my own for a paper, it easily classified the black rose as a rose. Nevertheless, there are of course cases where it becomes confused; for example, it thinks bouquets of tulips are roses. More training can likely improve this, although it will never be infallible. All in all, it was a really fun presentation, and it has given me some new ideas to try out. Yaz’s slides can be found here.
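For readers curious about what "retraining" means here, the idea can be illustrated with a toy sketch in plain Python. This is not the code from Yaz's talk. The names `frozen_features`, `train_head`, and `predict` are hypothetical, and so is the data: `frozen_features` is a fixed, hand-written stand-in for the pretrained network's penultimate layer (Inception-v3 in the talk), and three noisy 2-D clusters stand in for the new classes of flower photos. As in transfer learning, only a new softmax output layer is trained, while the feature extractor stays frozen.

```python
import math
import random


def frozen_features(x):
    """Hypothetical stand-in for a pretrained network's penultimate layer.

    In the talk this role was played by Inception-v3; here it is a fixed,
    hand-written feature map with no trainable parameters (the "frozen" part).
    """
    return [x[0], x[1], x[0] * x[1], 1.0]  # last entry acts as a bias term


def softmax(scores):
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_head(samples, labels, n_classes, lr=0.5, epochs=200):
    """Train only a new softmax output layer on top of the frozen features."""
    feats = [frozen_features(x) for x in samples]  # computed once, never updated
    dim = len(feats[0])
    weights = [[0.0] * dim for _ in range(n_classes)]
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            probs = softmax([sum(w * v for w, v in zip(row, f)) for row in weights])
            # Cross-entropy gradient w.r.t. each class score is (p_k - 1[k == y]).
            for k in range(n_classes):
                grad = probs[k] - (1.0 if k == y else 0.0)
                for j in range(dim):
                    weights[k][j] -= lr * grad * f[j]
    return weights


def predict(weights, x):
    f = frozen_features(x)
    scores = [sum(w * v for w, v in zip(row, f)) for row in weights]
    return scores.index(max(scores))


# Toy "new task": three noisy 2-D clusters standing in for new classes.
random.seed(0)
centres = [(-1.0, -1.0), (1.0, 1.0), (1.0, -1.0)]
X, y = [], []
for label, (cx, cy) in enumerate(centres):
    for _ in range(20):
        X.append((cx + random.gauss(0, 0.2), cy + random.gauss(0, 0.2)))
        y.append(label)

W = train_head(X, y, n_classes=3)
accuracy = sum(predict(W, x) == t for x, t in zip(X, y)) / len(X)
print(f"training accuracy: {accuracy:.2f}")
```

In the real setup the frozen part is a deep convolutional network and only its final classification layer is replaced and retrained. Training a single layer on precomputed features is much cheaper than training the whole network, which helps explain why retraining on a handful of flower photos finished within a talk.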

A few more highlights: the last talk I saw was by Anoop Vasant Kumar, who spoke about a type of recurrent artificial neural network called long short-term memory (LSTM), which she uses to capture the behaviour of her data. Mango Solutions were giving out kitten toys as well as providing free R workshops, and I managed to get some very cool socks from deepsense.io. Videos of all talks will be available soon here. Overall, I had a great time, although I do feel I need some time to recover and get back to normal after PyCon UK and ODSC UK. So I think I will take a few months’ break before I attend another tech conference. Recharging my batteries is as important as growing my skillset and networking. Besides, I think I’ve now amassed so many new friends and contacts that I can rest assured 2016 has been extremely productive.