It takes more than publications to recognise everyone in research

Posted on 19 February 2020

By Simon Hettrick, Deputy Director, Software Sustainability Institute

A version of this blog post was originally published on Research Professional News

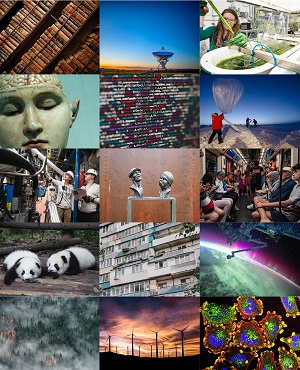

During the last REF, around 191,000 research outputs were submitted to showcase the best in UK research. They represented the work of 56,000 research staff in 154 institutions across 36 disciplinary areas. What was missing was any kind of variation in the outputs themselves: 97% were based on publications. Many of the skilled people who are vital to the conduct of research are not recognised in publications, which means they are also not recognised by the REF. This is why we launched the hidden REF, to celebrate all research outputs and the people who make them possible.

The research community has a myopic fixation on publications as the metric of research excellence - and it’s getting worse. The percentage of outputs based on publications has increased with each successive RAE and REF. I do not wish to denigrate the importance of publications. They are fundamental to the conduct of research and to academia’s contract with the public: taxes pay for research, publications disseminate knowledge. The problem arises when publications become the only measure of research success.

I first started thinking about this problem while investigating REF2014 to find representation for research software, which is my area of study. I expected to uncover a few thousand outputs in REF’s software category - but there were only 38. Around 70% of research is reliant on software, yet software is the basis for a mere 0.02% of REF outputs. This is disappointing for people like me who care about software’s role in reliable and reproducible research, but it’s a travesty for the people who create the software, because their expertise is being overlooked and undervalued. How can we expect to maintain a world-leading research environment if we ignore the vital contribution of large groups within the research community?

The REF itself is not at fault - at least for the focus on publications. After all, there was a software category in the last REF and there is one in the next. The problem is the risk-averse nature of the committees responsible for choosing which outputs to submit. In the course of trying to write guidance for the next REF, I talked to a number of researchers and REF managers, and the conclusion was always the same. University REF committees are under incredible pressure to maximise their return (and the funding their university will receive). They know publications and they’ve seen the reliance on them in previous REFs. Anything else looks risky.

It’s not just a problem with software. I work across a number of disciplines and have seen the same lack of recognition for technicians, data stewards, imaging scientists and medical statisticians. What about the disciplines I know nothing about? From the arts to zoology, you can bet there are many more of these important, yet hidden, research roles.

We will improve research if we recognise everyone’s contribution. I have seen this firsthand. In 2013, our organisation began a campaign for Research Software Engineers - the name we gave to people who develop software in academia. The campaign has achieved a number of successes, but possibly the greatest impact has been felt from the rise of “Research Software Engineering Groups” that are now hosted at 28 universities (with five new groups set up in 2019 alone). These groups make their expertise available to researchers within their institution, and that means thousands of researchers can now access people who understand both the research process and software engineering. This is a huge benefit to UK research and it occurred naturally once the importance of the Research Software Engineer was recognised.

The problem with supporting hidden roles is that you have to uncover them. That’s not easy in one’s own discipline, and it gets significantly more difficult if you attempt it across all of research. This is where the hidden REF comes in.

In the first phase of the competition, which runs up to July this year, anyone can suggest a category of research output that is currently unrecognised. There are no limitations on the outputs, so there are no limitations on the roles involved in their creation. Every month, we will announce the new categories and get feedback from the community about which should be adopted into the competition. The second phase starts in July, when the newly minted categories will open for submissions. These will be judged by panels of experts drawn from the research community, and the winners will be announced in November 2020. The hidden REF is about raising awareness of unrecognised roles in research, not about replicating the burden of the REF, so most categories are expected to require only a 300-word submission.

We have been fortunate to gain the support of a number of organisations, each of which supports communities of research professionals who have lacked recognition: BioimagingUK, the Data Curation Centre, the Imaging Scientist, the Society of Research Software Engineering, the Software Sustainability Institute, and the Technician Commitment. There is currently a skew in our supporters towards the sciences, but we hope this will change as word of the hidden REF gets out and new organisations join us.

It takes more than publications to recognise everyone's contribution to research. By raising awareness of the different outputs that are vital to research, I hope the hidden REF will lead to greater recognition of the variety of people we rely on to conduct research. Ultimately, we’re running this competition because we believe a fairer research environment is a more effective one.