This blog is part of the Collaborations Workshop 2025 Speed Blog Series. Join in and have a go at speed blogging at Collaborations Workshop 2026.

When working on research software, there is always a balance to strike between investing time in functionality, usability, and performance. Software developed and used in academic research environments also presents several unique challenges. Researchers often focus on functionality first and foremost, with little to no effort spent on the ease of use or the performance of their code. This is understandable given the pressure researchers are under to publish. Grants are typically awarded for novel and innovative ideas rather than for maintaining existing code, so researchers often have little incentive to sustain or update their code after publishing the associated papers. They may also view their code as being in an unsuitable state for public release, further discouraging long-term maintenance.

It is unrealistic to expect researchers to fully optimise their software’s performance while basic functionality is still being developed. However, steps towards improved usability and performance can be taken from the earliest stages of development. Simple things, like comments and docstrings, can be introduced as the codebase is being developed, and API documentation can then be autogenerated from the function docstrings. A simple README can be developed iteratively to include the motivation behind the code, the installation instructions, and community guidelines. Tests can also be created from the start, and they further improve usability.

Most research software is not created with the expectation that it will be passed on; instead, it’s developed with only the current project in sight. It’s important to recognise that these small steps can greatly improve future maintenance and usability, both for the original author and for any researcher who inherits the software.

Bare minimum documentation: benefits and barriers

Missing documentation harms the ability of others to pick up and use the software.

Is documentation the bare minimum for usability? Just docstrings and a good README can be sufficient. It doesn’t necessarily require a fully fledged user guide.

It can be argued that the bare minimum requirement for software to be considered usable is the presence of documentation. Research software often uses idiosyncratic workflows, so a lack of instructions documenting exactly how the code should be installed and run can be a significant barrier for new users. Documentation doesn’t necessarily need to be a set of nicely formatted HTML docs; a few docstrings, comments, and a README are often sufficient. However, keeping documentation up to date as a project develops is a continuous challenge. Project maintainers should encourage contributors to document each new piece of functionality as it is added.
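
As a minimal sketch of what "a few docstrings" can look like, the Python function below carries a short docstring describing its parameters, return value, and one usage example. The function `rolling_mean` and its layout are hypothetical illustrations, not taken from any particular project; the section headings follow one common docstring convention, and other conventions work equally well.

```python
def rolling_mean(values, window):
    """Return the rolling mean of a sequence (hypothetical example function).

    Parameters
    ----------
    values : list of float
        Input samples, in order.
    window : int
        Number of consecutive samples to average; must be between 1 and
        len(values) inclusive.

    Returns
    -------
    list of float
        One mean per full window, so the result has
        len(values) - window + 1 entries.

    Examples
    --------
    >>> rolling_mean([1.0, 2.0, 3.0, 4.0], window=2)
    [1.5, 2.5, 3.5]
    """
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    # Average each run of `window` consecutive samples.
    return [
        sum(values[i : i + window]) / window
        for i in range(len(values) - window + 1)
    ]


if __name__ == "__main__":
    # The docstring is available at runtime; documentation generators
    # read this same text to build API pages.
    print(rolling_mean.__doc__)
```

The same text serves a reader browsing the source, a user inspecting the function interactively, and any tool that autogenerates API documentation from docstrings.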

A possible barrier to producing minimal documentation is a researcher’s lack of confidence that their code is worth the effort of making it understandable to others. Perhaps the researcher or PI needs to be convinced that their code is of interest to others outside their research group, or that their area of study has more general applicability (particularly in terms of programming or algorithms) than they may have first thought. Many of the decisions about what documentation is required need to be made early in the process of bringing additional developers (e.g. RSEs) onto a project.

Unit tests can sit alongside the core code base as a means of demonstrating the functionality of the code. They improve not only the usability, but also the sustainability of the code. Unit tests give others the confidence to build on existing code, knowing that they haven’t inadvertently broken other parts of the code base. In addition, the presence of tests in a project facilitates profiling and improving performance. Integration and regression tests can provide self-contained snippets to run specific parts of the code in isolation, providing a standardised test suite against which the correctness and performance of the code base can be measured.
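
A small sketch of unit tests sitting alongside the code they exercise is shown below. The function `normalise` and the test names are hypothetical; the tests use plain `assert` statements so that a test runner such as pytest can collect them, though that choice of runner is an assumption and not something the text prescribes.

```python
import math


def normalise(vector):
    """Scale a vector to unit Euclidean length (hypothetical example function)."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalise a zero vector")
    return [x / norm for x in vector]


def test_normalise_gives_unit_length():
    # The result should have Euclidean length 1, within floating-point tolerance.
    result = normalise([3.0, 4.0])
    assert math.isclose(math.sqrt(sum(x * x for x in result)), 1.0)


def test_normalise_preserves_direction():
    # A 3-4-5 triangle gives components of 0.6 and 0.8.
    result = normalise([3.0, 4.0])
    assert all(math.isclose(a, b) for a, b in zip(result, [0.6, 0.8]))


def test_normalise_rejects_zero_vector():
    # The error case is part of the documented behaviour, so test it too.
    try:
        normalise([0.0, 0.0])
    except ValueError:
        return
    raise AssertionError("expected ValueError for a zero vector")
```

Each test doubles as a short, runnable usage example, and the same functions give a fixed workload to time before and after any optimisation.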

Bare minimum profiling: benefits and barriers

Profiling is an essential and inexpensive first step to understanding and improving research software performance.

Researchers who write code often lack formal programming training, which leads to bad habits that can greatly impact the performance of their code. As with documentation, profiling does not have to involve difficult tooling or in-depth analysis, but could consist of basic measures such as timing parts of the program. However, academic developers and users with little software experience or training may not recognise when performance is unexpectedly poor for a given problem. Many researchers will think of HPC or GPU compute when asked to improve the performance of their code, without having ever profiled their existing code to understand whether easily addressed bottlenecks exist. Consulting an RSE throughout the software development process, even with a short consultation, could help keep software both usable and performant.
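
The sketch below illustrates the two basic measures described above using only the Python standard library: timing a single call with `time.perf_counter`, and collecting a function-level profile with `cProfile`. The workload `slow_sum_of_squares` and the helpers `time_call` and `profile_call` are hypothetical names introduced purely for illustration.

```python
import cProfile
import io
import pstats
import time


def slow_sum_of_squares(n):
    """Deliberately naive workload: builds a full list before summing it."""
    squares = []
    for i in range(n):
        squares.append(i * i)
    return sum(squares)


def time_call(func, *args):
    """Return (result, elapsed seconds) for a single call of func(*args)."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


def profile_call(func, *args, top=5):
    """Run func under cProfile; return (result, text report of the top entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time so the most expensive call paths come first.
    stats.sort_stats("cumulative").print_stats(top)
    return result, buffer.getvalue()


if __name__ == "__main__":
    _, elapsed = time_call(slow_sum_of_squares, 200_000)
    print(f"elapsed: {elapsed:.4f} s")
    _, report = profile_call(slow_sum_of_squares, 200_000)
    print(report)
```

A single timing is noisy, so repeating the measurement a few times is usually worthwhile. The profile report then shows which functions the time is actually spent in, which is the information needed before deciding whether HPC or GPU resources are warranted.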

Suggestions

One possible solution currently employed by the UCL Centre for Advanced Research Computing (ARC) is their ways-of-working agreement, which is detailed on their website. Before a project commences, ARC requires that their collaborating research groups understand and agree to facilitate the development of tests, that refactoring without the constant production of features is often essential, and that their code must be stored on GitHub or a similar remote git repository provider. This agreement helps ensure that collaborators allocate time for maintenance, which will ultimately benefit their software by increasing its usability and providing a convenient way for RSEs to prioritise documentation and testing.

Conclusion

Fundamentally, any research software should solve the problem it is intended for, i.e. it must be functional. However, a great deal of time is spent rewriting functionally correct code to make it more performant, more usable, or more sustainable.

When beginning any research software endeavour, functionality, usability, and performance must all be given adequate consideration. Features, tools, or workflows that support usability and allow performance to be assessed should be implemented, at least to a minimum level, from the outset. Adding documentation and unit tests to a fully functional but undocumented and untested code base can be an enormous task, and it presents a huge barrier to the future usability and sustainability of the software.

There are many popular tools for making a developer’s life easier when it comes to writing documentation and assessing performance. Setting these up from the beginning of the project helps ensure sustained effort goes towards these throughout the project lifecycle.

If you’re a researcher who could benefit from learning more about documenting, testing, or profiling your code, reach out to your local Research Computing or RSE team. Many universities now offer training to upskill research staff and students in the skills required to produce robust and usable research software. If you are already familiar with these techniques, make sure to advocate for them with your collaborators who aren’t. Everyone benefits when research software is built to be used by others.

| Authors | |
| --- | --- |
| Patrick J. Roddy | University College London, patrick.roddy@ucl.ac.uk, 0000-0002-6271-1700 |
| Robert Chisholm | University of Sheffield, robert.chisholm@sheffield.ac.uk, 0000-0003-3379-9042 |
| Liam Pattinson | University of York, liam.pattinson@york.ac.uk, 0000-0001-8604-6904 |
| Andrew Gait | University of Manchester, andrew.gait@manchester.ac.uk, 0000-0001-9349-1096 |
| Daniel Cummins | Imperial College London, daniel.cummins17@imperial.ac.uk, 0000-0003-2177-6521 |
| Jason Klebes | University of Edinburgh, jason.klebes@ed.ac.uk, 0000-0002-9166-7331 |
| Connor Aird | University College London, c.aird@ucl.ac.uk |

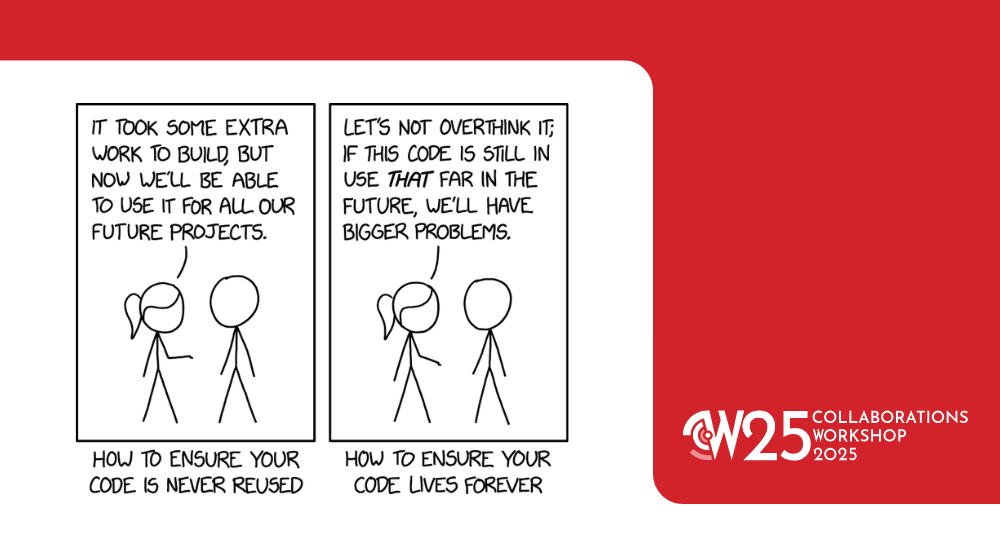

Original image from xkcd.com