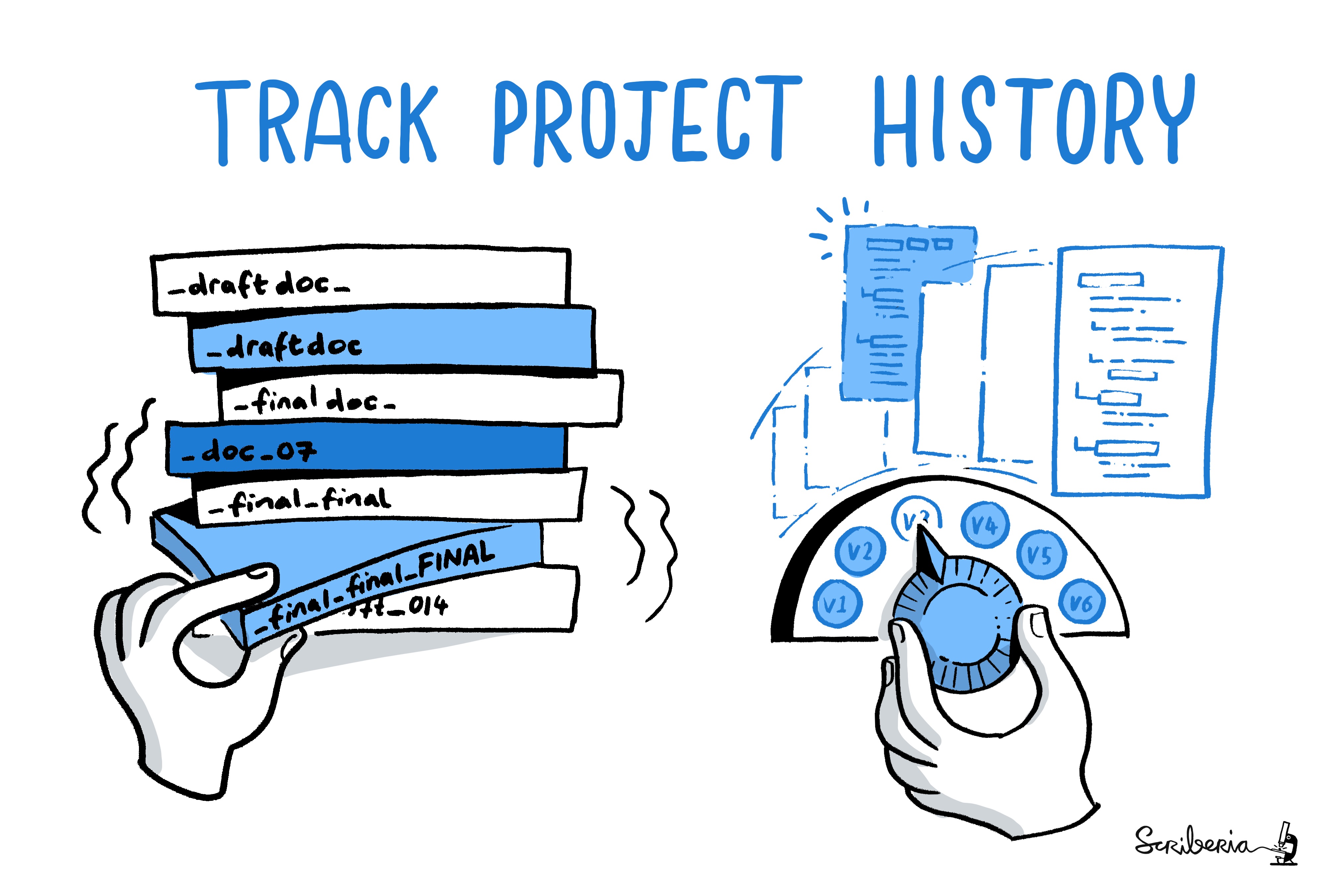

On the 22nd of May, the Imperial College London Research Software Engineering community hosted the first session of the Research Software Conversation series: Tools and Techniques for Modern Research in different domains. This event explored how version control helps to make research more reproducible.

The event opened with a short talk by Ms Hui Ling Wong, "Presentation Final Final Final: Version Tracking with Git", which outlined the challenges version control addresses, demonstrated its role in research reproducibility and productivity, and delivered a concise Hitchhiker’s Guide to Git for researchers. To complement her presentation, she also introduced a website she's developing to signpost researchers toward useful Git-related resources. This was followed by a round table discussion, facilitated by Dr Irufan Ahmed (Senior Research Software Engineer), with Dr Chris Cantwell (Reader in Computational Engineering), Prof. Sylvain Laizet (Professor in Computational Fluid Mechanics), Prof. Rafael Palacios (Professor in Computational Aeroelasticity), and Dr Alvaro Cea (Research Associate).

Photo by Saranjeet Bhogal

The discussion was frank, insightful, and occasionally humorous, unfolding in an engaging fireside chat atmosphere as panellists shared hard-earned lessons from decades of working with (and without) version control. A wide range of topics was explored, from the pivotal moments that drove adoption to how academic incentive structures often hinder, rather than promote, the adoption of good software practices. Though time ran short, the conversation yielded rich insights. The following sections are highlights from the discussion.

Photo by Saranjeet Bhogal

Git Happens: The Journey to Version Control

In research, the multitude of demands placed on the researcher means that version control adoption is rarely driven by a commitment to best practices. Instead, it is forged in the fires of lost time, increasing frustrations with the status quo, and a deep-seated conviction that there must be a better way. Those experiences turn version control into a vital tool for tackling the trials academia throws at researchers.

This journey was a resonant theme through the panel’s stories:

- Sylvain reached a turning point when the growing complexity made solving the problem untenable without version control and better software practices.

- Chris watched Nektar++ grow beyond being a departmental project. Dropping Subversion in favour of Git became the linchpin for empowering collaborators to work independently while safeguarding the code from wayward PhD projects.

- Rafa’s aerospace industry experience – where he effectively became the version control system and endured the tedium of dealing with a folder-based system – left an indelible mark on him. When he returned to academia, it spurred him to learn Subversion and to begin challenging the prevailing mindset that treated PhD code as disposable, valuing only the resulting equations and theory.

Their collective dream is to sing an ode to the bygone days of tar balls of code and manually picking out changes. Underlying this sentiment was a core message from Hui Ling’s talk that had resonated amongst the panel: the code written and the practices adopted are not for your present self, but for your future self.

When Best Practices Meet Publish-or-Perish

Employing version control and ensuring reproducibility are fundamental to high-quality research; they should serve as the benchmark for all research. However, the relentless push for publications and career milestones within academia’s incentive system often pushes these best practices out of sight. The view that the extraneous pressure placed upon a researcher by the machinery of academia works in direct opposition to these practices was succinctly summarised by a panellist in one word: “everything”.

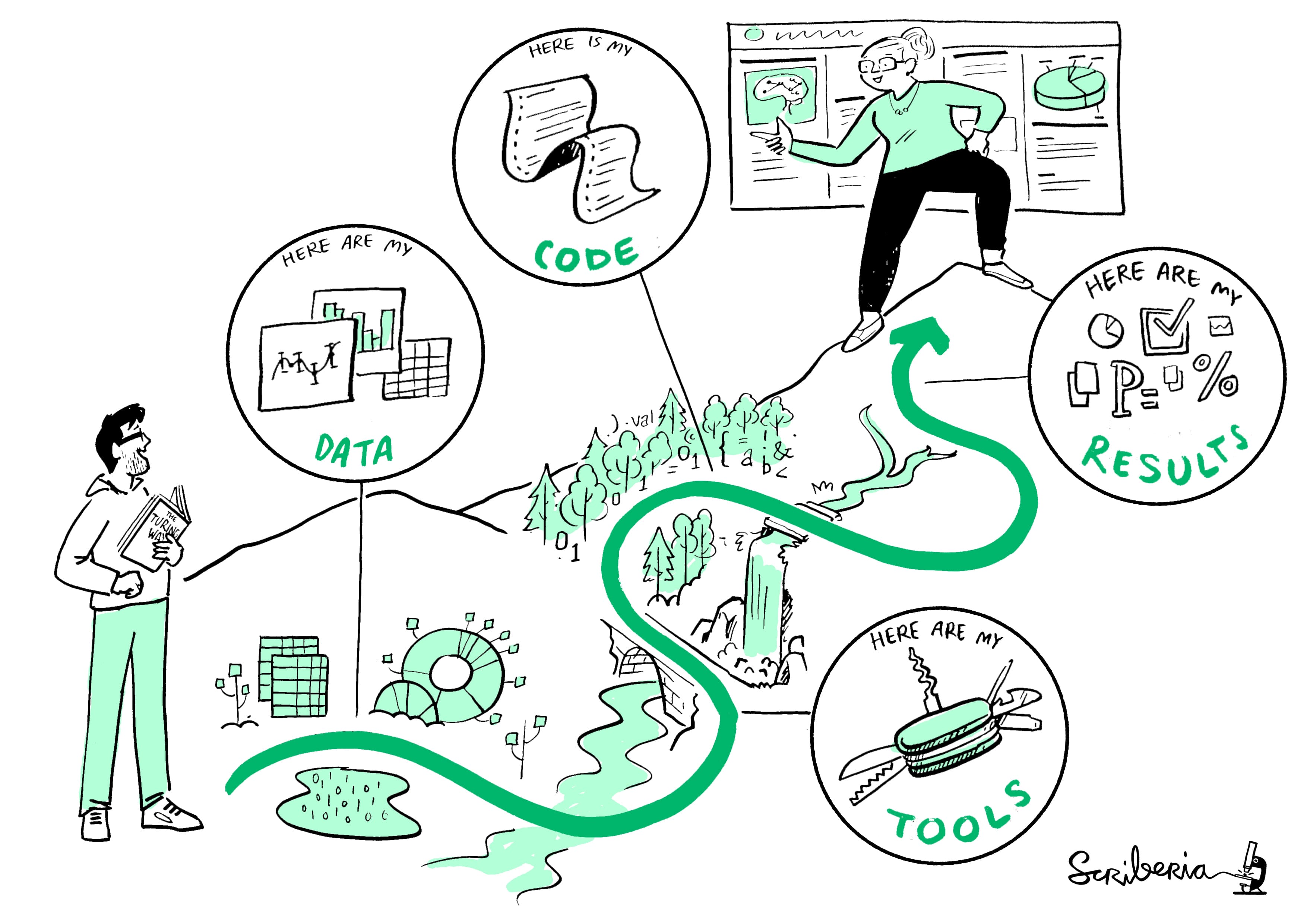

Throughout an academic career, researchers are initially judged on publication counts and citation impact, and later on their ability to secure sizeable grants. Reproducibility, however, rarely features among these yardsticks. This omission trickles down into the culture of a research group, where a computational PhD student can complete their studies without ever using version control or submitting their code. Panellists agreed that only a top-down mandate from institutions, funding agencies, and journals has the potential to drive the lasting cultural shift needed to make reproducibility and good software practices standard.

Encouragingly, more journals are now asking authors to share the data sets and configuration files behind their papers and to make their code available. This requirement moves the field beyond simply showcasing impressive results to ensuring those findings can be independently verified and built upon.

Despite the gloomy outlook, the conversation had a levity, buoyed by a genuine sense of hope. At its core, the panel agreed that the real impetus behind the adoption of version control is the pursuit of research excellence. Tackling the most demanding (and often most rewarding) problems requires code that can handle significant complexity and can evolve. Version control and supporting tools become indispensable, with rigorous workflows and safeguards embedded into every stage of development. In other words, achieving the highest levels of insight and impact requires robust tooling and reproducibility baked into the research itself.

Shifting the Academic Glacier (with a Spoon and Optimism)

Currently, computational researchers wade into complex code without a formal induction. Experimentalists, by contrast, receive thorough safety briefings before they handle any equipment. Poor code and software practices may not maim researchers or cause an accidental explosion, but they can subject one to needless frustrations and waste invaluable time. To close this gap, we need to extend that same level of structured onboarding to every newcomer and support every member in our endeavour.

At a team level, newcomers should be inducted into version control and documentation best practices from the start. This will guard against preventable errors and future pains. Coupling this with a culture of regular code reviews and occasional pair programming sessions with a more senior member of the team will help with onboarding overwhelmed newcomers. Moreover, it can dispel the initially daunting nature of software development.

At an institutional level, the introduction of Research Software Engineers (RSEs) has been a long-awaited game-changer. RSEs are the lab technicians of the computational research world – irreplaceable and capable of making all the difference. They deliver the dedicated support and expertise that experimentalists have long enjoyed but computational researchers have lacked. In Imperial’s Aeronautics Department, the RSE role is a recent yet already impactful addition. By introducing RSE roles in each department, we can transform reproducibility from an optional extra into the norm, while simultaneously improving productivity.

These actions can be complemented by debunking two insidious myths: that hosting code on GitHub is equivalent to open sourcing; and that learning Git is as intimidating as most assume. Moreover, it should be reinforced that version control alone will not be the silver bullet. Every repository must have a robust test suite and be incorporated into continuous integration pipelines. Automated testing enforces quality, catches issues early, enables pinpointing where a bug was introduced, and gives researchers the confidence to iterate rapidly and without fear.
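To make the point about pinpointing bugs concrete, here is a minimal Python sketch of the idea behind Git's `git bisect` command, which performs a binary search over the commit history and can be driven by an automated test script via `git bisect run`. The sketch is a conceptual illustration, not Git's implementation: the function name `first_bad_commit`, the commit labels, and the stand-in test suite are all hypothetical, and it assumes that once the bug appears every later commit also fails.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str],
                     passes_tests: Callable[[str], bool]) -> str:
    """Return the earliest commit whose test run fails.

    Assumes commits are ordered oldest to newest, the first one passes,
    the last one fails, and every commit after the bug appears also fails.
    """
    good, bad = 0, len(commits) - 1  # indices of a known-good and a known-bad commit
    while bad - good > 1:
        middle = (good + bad) // 2
        if passes_tests(commits[middle]):
            good = middle  # the bug was introduced after this commit
        else:
            bad = middle   # the bug was introduced at or before this commit
    return commits[bad]


# Hypothetical history of eight commits; the bug slips in at "c5".
history = [f"c{i}" for i in range(8)]
checked = []  # records which commits the test suite was run against


def fake_test_suite(commit: str) -> bool:
    """Stand-in for a real automated test run (an assumption for this sketch)."""
    checked.append(commit)
    return int(commit[1:]) < 5


culprit = first_bad_commit(history, fake_test_suite)
print(culprit, "found after", len(checked), "test runs")
# prints: c5 found after 3 test runs
```

The search only works because the test suite gives a trustworthy pass or fail answer for any commit, which is why the panel's emphasis on automated testing and version control go hand in hand.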

Outside the research sphere, rethinking how programming is taught to undergraduates is the next (albeit idealistic) big step. Currently, STEM undergraduates learn to program on toy problems. These do not adequately prepare them for the sprawling code bases they will encounter in practice. Consequently, they should be trained to 1) break problems down into modular, decoupled, reusable components; 2) navigate and understand large code bases; and 3) apply design patterns and software architecture principles. The emphasis on usability and maintainability will not only cultivate researchers who naturally think in terms of clean code but also equip them with transferable skills that are valuable in any industry.

Ultimately, lasting change will demand more than individual good intentions. Universities, funding bodies, and journals must set clear mandates, provide infrastructure, and reward reproducible practices. Every workshop we run, policy we influence, and RSE we hire chips away at the ice. If we pick up our spoons and act with optimism, we can transform best practices into the academic standard we aspire to.