3D archaeology - now low-cost, high-volume and crowd-sourced

Posted on 23 July 2014

By Andrew Bevan, Senior Lecturer, UCL Institute of Archaeology.

This article is part of our series: a day in the software life, in which we ask researchers from all disciplines to discuss the tools that make their research possible.

Archaeologists have long had a taste for computer-based methods, not least because of their need to organise large datasets of sites and finds, search for statistical patterns and map out the results geographically. Digital technologies have been important in fieldwork for at least two decades and increasingly important for sharing archaeology with a wider public online. However, the last decade of advances in computer vision now means that the future of archaeological recording – from whole landscapes of past human activity to archaeological sites to museum objects – is increasingly digital, 3D and citizen-led.

Structure-from-motion and multi-view stereo together constitute a bundle of ‘computer vision’ methods, referred to here collectively as ‘SfM’. They are a flexible form of photogrammetry (itself a science with a much older pedigree) in which software automatically identifies small features in digital photographs and then matches these across large sets of heavily overlapping images in order to reconstruct the camera positions from which the photographs were taken.
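
To make the matching step a little more concrete: SfM packages typically describe each detected feature with a numeric descriptor (SIFT is a common choice) and then pair up features between images. The sketch below is a minimal illustration under stated assumptions, not the code of any particular package. It assumes descriptors have already been extracted into NumPy arrays, and pairs them by nearest-neighbour search with Lowe's ratio test plus a mutual-consistency check. The random "descriptors" are synthetic stand-ins.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match rows of desc_a to rows of desc_b (desc_b needs at least 2 rows).

    A match is kept only if the nearest neighbour in desc_b is clearly closer
    than the second nearest (Lowe's ratio test) and the match is mutual.
    """
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b))
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc_a))
    passes_ratio = dists[rows, best] < ratio * dists[rows, second]
    # Mutual check: each matched desc_b row must point back to the same desc_a row
    back = np.argmin(dists, axis=0)
    mutual = back[best] == rows
    keep = passes_ratio & mutual
    return [(int(i), int(best[i])) for i in rows[keep]]

# Synthetic example: image B sees the same 50 features (shuffled, slightly
# perturbed) plus 20 unrelated distractor features.
rng = np.random.default_rng(0)
desc_a = rng.normal(size=(50, 128))
perm = rng.permutation(50)
desc_b = np.vstack([
    desc_a[perm] + rng.normal(scale=0.05, size=(50, 128)),
    rng.normal(size=(20, 128)),
])
matches = match_descriptors(desc_a, desc_b)
```

In a real pipeline the surviving matches would then be filtered again with a geometric model (for example, by estimating the relative camera pose robustly) before camera positions are reconstructed.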

This first step then allows for denser reconstructions in the form of coloured 3D point clouds or photo-textured 3D mesh models. Complete SfM pipelines are now available in open-source software, such as VisualSfM, Bundler and PMVS2, in commercial packages like PhotoScan and Acute3D, and in cloud-based services such as 123D Catch and Photosynth.
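
Once the camera positions are known, each matched feature can be placed in 3D by triangulation. Dense multi-view stereo tools such as PMVS2 go much further (matching small patches across many views), so what follows is only a minimal sketch of the underlying geometry: linear (DLT) triangulation of one point from two views, assuming the 3×4 camera projection matrices are already known. The camera parameters and test point are made up for illustration.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices.
    x1, x2: (u, v) pixel coordinates of the same feature in each image.
    """
    # Each view contributes two linear constraints on the homogeneous point X
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Toy setup: shared intrinsics K, second camera centred 1 unit along x
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.3, -0.2, 5.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free projections the point is recovered exactly; with real, noisy matches the same linear solution is usually refined further by bundle adjustment across all cameras.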

A couple of years ago the first paper on SfM in archaeology was published, and the range of applications has exploded since. A recent Nature article rightly characterised this as a “quiet revolution” sweeping across disciplines such as archaeology, art history, architecture, palaeontology and taxonomic biology. SfM allows for the creation of high-quality 3D models at lower cost than alternatives such as laser scanning. However, as the examples below try to make clear, SfM really comes into its own by allowing large samples of objects to be modelled rather than just one or two, thereby enabling 3D morphometric comparisons, and by democratising the 3D modelling process in ways that facilitate effective citizen science.

For example, we have recently been using SfM as part of a collaboration between UCL and the Museum of Emperor Qin Shihuang's Mausoleum that examines the imperial logistics behind the construction of the mausoleum complex of China’s First Emperor. We have been studying both the bronze weapons held by the Terracotta Warriors and the warriors themselves, to see what insights very fine-scale shape differences in these artefacts might offer. Janice Li and Marcos Martinón-Torres have blogged about the bronze weapons (with further references to published papers), but our most recent efforts have focused on the respective shapes of the warriors, with special attention to their ears!

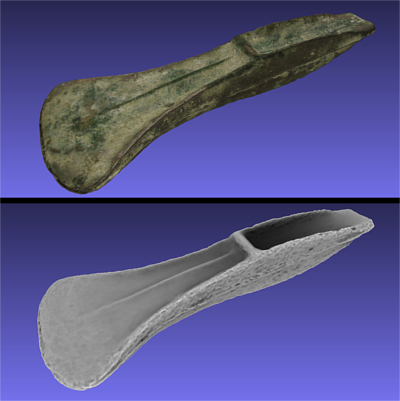

A 3D model of a Bronze Age axe, shown both with a photographic texture and with an ‘ambient occlusion’ surface (online version). Image by Andrew Bevan.

We were interested in several possible causes of differences in the warriors' features, ranging from the unconfirmed possibility that the statues were modelled on real people to the possible signature styles of workshop cells. So far, however, our efforts have focused mainly on establishing that we can capture 3D data on irregular features such as ears via SfM, and then subject these data to meaningful 3D shape analysis (3D morphometrics). For the latter, we use CloudCompare, an open-source point cloud processing package, to finely co-register individual ear models and then estimate their goodness of fit; we then use the R statistical environment to assemble the pairwise comparisons into a distance matrix for further analysis.
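
For readers curious about what co-registration and goodness of fit involve, here is a rough Python stand-in for that CloudCompare-and-R workflow; it is not our actual code. It assumes each ear model is an N×3 NumPy array of points, aligns pairs rigidly with a basic point-to-point ICP (iterative closest point) loop using brute-force nearest neighbours, takes the final root-mean-square (RMS) nearest-neighbour distance as the misfit, and fills a symmetric distance matrix. CloudCompare's own registration and distance tools are more sophisticated, and the toy "ears" below are random point sets.

```python
import numpy as np

def kabsch(src, dst):
    """Best-fit rotation R and translation t mapping src onto dst (paired rows)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def nearest(src, dst):
    """Index and distance of the nearest dst point for each src point (brute force)."""
    d = np.linalg.norm(src[:, None, :] - dst[None, :, :], axis=2)
    idx = d.argmin(axis=1)
    return idx, d[np.arange(len(src)), idx]

def icp_rms(src, dst, iterations=30):
    """Rigidly align src to dst with basic ICP; return the final RMS misfit."""
    moved = src.copy()
    for _ in range(iterations):
        idx, _ = nearest(moved, dst)
        R, t = kabsch(moved, dst[idx])
        moved = moved @ R.T + t
    _, dist = nearest(moved, dst)
    return float(np.sqrt(np.mean(dist ** 2)))

def distance_matrix(clouds):
    """Symmetric matrix of pairwise misfits (mean of both alignment directions)."""
    n = len(clouds)
    D = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            D[i, j] = D[j, i] = 0.5 * (icp_rms(clouds[i], clouds[j])
                                       + icp_rms(clouds[j], clouds[i]))
    return D

# Hypothetical "ears": ear_b is ear_a slightly rotated, shifted and noisy;
# ear_c has a different overall shape.
rng = np.random.default_rng(1)
ear_a = rng.normal(size=(200, 3)) * [3.0, 1.5, 0.5]
theta = 0.05
Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta), np.cos(theta), 0.0],
               [0.0, 0.0, 1.0]])
ear_b = ear_a @ Rz.T + [0.05, -0.03, 0.02] + rng.normal(scale=0.01, size=(200, 3))
ear_c = rng.normal(size=(200, 3)) * 1.5
D = distance_matrix([ear_a, ear_b, ear_c])
```

A matrix like `D` could then be passed to clustering or ordination methods (hierarchical clustering, for instance), which is the kind of further analysis we carry out in R.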

So wherever we wish to construct 3D classifications of physical shapes, SfM may prove useful. A further reason for its growing popularity is that it allows good-quality data collection to be crowd-sourced. For example, we can ask members of the public visiting archaeological sites to capture and upload image sets for 3D modelling, and thereby preserve 3D snapshots of structures that are unfortunately damaged and decay over time. Two good examples of projects doing this are Heritage Together and ACCORD (Archaeology Community Co-Production of Research Data). We can also crowd-source smaller-scale 3D models of individual archaeological objects: the MicroPasts project (a collaboration between UCL and the British Museum) is producing large numbers of such models, ranging from British prehistoric bronze axes to Egyptian shabtis (funerary figurines).

As with the Terracotta Warriors, the point of such an effort is to create not just one or two pretty models but a large sample whose 3D shapes can be compared statistically. MicroPasts’ crowd-sourcing runs on a web platform built on PyBossa, an increasingly popular task-scheduling and citizen science framework, and through it we run a range of crowd-sourcing applications, including online transcription. For 3D modelling, museum staff photograph each artefact and serve the photos via Amazon S3 to our platform, where online contributors draw outlines around the objects they see, using OpenLayers 3. This allows the SfM software to focus on modelling the objects rather than the image background.

In some cases, we ask up to three contributors to mask each photo so that we can check quality. The resulting GeoJSON datasets are then downloaded and converted from vector coordinates into binary raster masks via a Python script, before the 3D modelling is conducted offline. These user-generated masks remain better than the object/background distinctions we could generate automatically, and they allow us to build much cleaner 3D models. We then make the raw photos, masks and models available as open-licensed direct downloads, and viewable online via WebGL. For an example, see this model of a bronze axehead. If you are interested in the practical workflow, our learning resources are online, and the project's software outputs are also available on GitHub.
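
The mask-conversion step can be sketched roughly as follows. This is a hypothetical illustration, not the MicroPasts script on GitHub. It assumes each contributor's outline arrives as a GeoJSON FeatureCollection of Polygon features in pixel coordinates with the origin at the top-left of the photo (if the drawing tool uses a bottom-left origin, the y values would need flipping first), ignores polygon holes, rasterises outlines with the even-odd rule, and combines three contributors by majority vote, reporting intersection-over-union (IoU) as a simple agreement check.

```python
import json
import numpy as np

def polygon_to_mask(ring, width, height):
    """Rasterise one polygon ring [[x, y], ...] to a boolean mask (even-odd rule).

    A pixel counts as inside if its centre (col + 0.5, row + 0.5) is inside the ring.
    """
    xs, ys = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)
    inside = np.zeros((height, width), dtype=bool)
    pts = np.asarray(ring, dtype=float)
    for k in range(len(pts)):
        xa, ya = pts[k]
        xb, yb = pts[(k + 1) % len(pts)]
        # Does this edge cross the horizontal line through each pixel centre?
        crosses = (ya > ys) != (yb > ys)
        # x position of the crossing (only used where crosses is True)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_int = xa + (ys - ya) * (xb - xa) / (yb - ya)
        inside ^= crosses & (xs < x_int)
    return inside

def mask_from_geojson(text, width, height):
    """Union of the exterior rings of all Polygon features (holes ignored)."""
    mask = np.zeros((height, width), dtype=bool)
    for feature in json.loads(text)["features"]:
        geom = feature["geometry"]
        if geom["type"] == "Polygon":
            mask |= polygon_to_mask(geom["coordinates"][0], width, height)
    return mask

def consensus(masks, min_votes=2):
    """Keep pixels marked as object by at least min_votes contributors."""
    return np.sum(masks, axis=0) >= min_votes

def iou(a, b):
    """Intersection-over-union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union else 1.0

def square_fc(x0, y0, x1, y1):
    """Toy outline: a FeatureCollection holding one rectangular polygon."""
    ring = [[x0, y0], [x1, y0], [x1, y1], [x0, y1], [x0, y0]]
    return json.dumps({"type": "FeatureCollection", "features": [
        {"type": "Feature", "properties": {},
         "geometry": {"type": "Polygon", "coordinates": [ring]}}]})

# Three hypothetical contributors outline the same object slightly differently
W, H = 20, 10
outlines = [square_fc(2, 2, 10, 8), square_fc(3, 2, 10, 8), square_fc(2, 2, 11, 8)]
masks = [mask_from_geojson(t, W, H) for t in outlines]
final = consensus(masks)
agreement = [iou(m, final) for m in masks]
```

A majority vote is only one way of combining redundant masks; a union or a unanimous vote would instead trade cleaner backgrounds against clipped object edges.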

Main image: 3D models of the heads of two terracotta warriors (and their distinctive ear shapes).