Heroes of software engineering - the men and women at Transitive

Posted on 18 September 2013

By Ian Cottam, IT Services Research Lead, The University of Manchester.

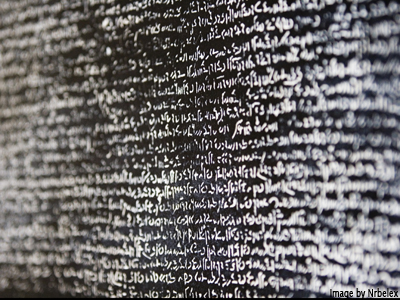

“Rosetta. The most amazing software you’ll never see.” Apple

Background and a disclaimer

The next post in my heroes of software engineering series focuses on a company rather than an individual. Transitive was a spin-out company from my University (Manchester, UK) that started around October 2000. My story today is about how they helped Apple move from the PowerPC chip series to Intel x86 processors, by working with Apple engineers to produce the dynamic binary translator that Apple called Rosetta. Transitive no longer exists – in 2008 its staff and offices were acquired by IBM – and, in a sense, neither does Rosetta (but more of that later).

Disclaimer: I worked at Transitive for just over a couple of years from its inception, but this is no self-aggrandisement piece as I had a senior management role, and can therefore exclude myself from the large set of heroes of this tale. (One could compare managers with Johnson’s lexicographers: “harmless drudges”. Some of the best drudges remove the crud that gets in the way of engineers, so that they can be creative and produce work of outstanding quality. And, without doubt, my heroes of today’s story did just that.)

This is also my first blog in this series where I don’t feel that I can name-check individual heroes, mainly because they are so numerous: around 40 software engineers during my time with the company, and closer to 100 later. I still find it fascinating that such a large group successfully produced quite a lean piece of software. (By the way, if members of the Transitive software engineering team would like a name-check, all you have to do is leave a comment on this article, using the facility at the end of the page.)

Dynamic Binary Translation

The technology of Dynamic Binary Translation is, perhaps, not so familiar: hence this short introductory section. Let’s start with binary translation. This means you have a program that takes a machine code executable binary for one processor family (say, PowerPC) and transforms it into an equivalent executable binary for another processor family (say, x86). If you managed to do such a translation all in one go, you would have a Static Binary Translator. Now, these are difficult to achieve and probably not as efficient as you would like (in terms of the speed of the resulting code). Static Binary Translators are hard to produce because programs contain computed gotos. That is, you don’t know the destination address of a branch until run-time, and therefore it may be very hard to determine what is code and what is data.

So, let’s bring on Dynamic Binary Translators. These are similar to just-in-time compilers (e.g. for Java byte code): they translate a basic block of machine code, cache the translated version, and execute it. If the next/destination basic block is not in the cache, they repeat the above; otherwise they jump to its cache location, where it can be executed at full speed. A naïve version of such a translator might do no more, and simply hope most of the code ends up in the translation cache. (By the way, a basic block is a sequence of machine code where the only transfer of control instruction is at the very end. Binary machine code programs are directed graphs with basic blocks as the nodes and branches as the edges.)
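The translate, cache and execute cycle described above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the guest "machine code" is an invented two-opcode language, the "translation" is a Python closure rather than real host code, and the names `GUEST_PROGRAM`, `translate_block` and `execute` are hypothetical. It is not how Rosetta was implemented.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy guest program (invented for illustration): block id -> instructions.
# Every block ends with exactly one control-transfer instruction.
GUEST_PROGRAM: Dict[int, List[tuple]] = {
    0: [("add", 1), ("branch_lt", 5, 0, 1)],  # acc += 1; if acc < 5 goto 0 else goto 1
    1: [("add", 100), ("halt",)],
}

# A translated block maps the accumulator to (new accumulator, next block id).
Translated = Callable[[int], Tuple[int, Optional[int]]]


def translate_block(block: List[tuple]) -> Translated:
    """'Translate' one guest basic block into a host-level closure."""
    *body, last = block
    # In this toy every non-final instruction is ("add", n), so fold them.
    delta = sum(arg for _op, arg in body)

    def run(acc: int) -> Tuple[int, Optional[int]]:
        acc += delta
        if last[0] == "branch_lt":
            _, limit, if_true, if_false = last
            return acc, (if_true if acc < limit else if_false)
        return acc, None  # "halt": no successor block

    return run


def execute(program: Dict[int, List[tuple]], entry: int = 0) -> Tuple[int, int]:
    """Run the program; return (final accumulator, number of translations)."""
    cache: Dict[int, Translated] = {}  # the translation cache
    translations = 0
    acc, pc = 0, entry
    while pc is not None:
        if pc not in cache:            # cache miss: translate once
            cache[pc] = translate_block(program[pc])
            translations += 1
        acc, pc = cache[pc](acc)       # cache hit: run the translated block
    return acc, translations


if __name__ == "__main__":
    print(execute(GUEST_PROGRAM))
```

Block 0 runs five times but is translated only once; every later visit is served from the cache, which is the whole point of the scheme.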

Whenever you run the translator with different input data for the program under translation, you get a different, specialised result. This is key to getting efficient output code, because the translator has knowledge that a static compiler does not have. Consider a simple example. The original code calls a pre-compiled library/system routine that copies bytes from one location to another. Now, this code has to cope with the general case where you might be copying a million bytes, but a dynamic translator can spot that you are, in one case, say, copying only 100 bytes. Hence it can choose to unroll the copy loop, inline it and create a potential speed boost over the original code. (Note, though, that we have increased the size of the output, as it is likely that the copy routine is called from many points in the original with different input data.)
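To make the byte-copy example concrete, here is a minimal sketch of run-time specialisation. It assumes a hypothetical `specialise_copy` helper that, once the length is known, generates a straight-line (fully unrolled) copy; the `unroll_limit` of 128 is an arbitrary illustrative threshold, and generating Python source with `exec` merely stands in for emitting machine code.

```python
def generic_copy(src, dst, n):
    """The general-purpose routine: must cope with any length."""
    for i in range(n):
        dst[i] = src[i]


def specialise_copy(n, unroll_limit=128):
    """Return a copy routine specialised for a length seen at run time.

    Small lengths get a fully unrolled, loop-free body; large ones fall
    back to the generic loop. Each distinct length yields a separate
    routine, which is why specialisation increases output size.
    """
    if n > unroll_limit:
        return lambda src, dst: generic_copy(src, dst, n)
    lines = ["def unrolled(src, dst):"]
    lines += [f"    dst[{i}] = src[{i}]" for i in range(n)] or ["    pass"]
    namespace = {}
    exec("\n".join(lines), namespace)  # stand-in for code generation
    return namespace["unrolled"]


if __name__ == "__main__":
    src = bytearray(range(100))
    dst = bytearray(100)
    copy_100 = specialise_copy(100)    # the translator "spots" n == 100
    copy_100(src, dst)
    print(dst == src)
```

Whether unrolling actually wins depends on the host processor and on how often that call site is executed, so a real translator would apply it selectively.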

Dynamic Binary Translators rely, to some extent, on the fact that - post-initialisation - many programs have critical inner loops. Good translators can detect these and re-generate the code for the set of basic blocks that make up the inner loops, which optimises the hell out of them (this of course takes a little longer, so you don’t want to do it all the time).
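One common way to find those critical inner loops is to count how often each block is executed and re-translate it more aggressively once it crosses a threshold. The sketch below assumes that approach; the `TieredCache` class, the `HOT_THRESHOLD` value and the two translator callbacks are hypothetical names for illustration, not a description of Transitive's actual heuristics.

```python
from collections import Counter

HOT_THRESHOLD = 3  # illustrative value; a real translator would tune this


class TieredCache:
    """Translation cache that upgrades frequently executed blocks."""

    def __init__(self, quick_translate, optimise):
        self.quick_translate = quick_translate  # fast, unoptimised translation
        self.optimise = optimise                # slower, better code
        self.code = {}                          # block id -> translated code
        self.tier = {}                          # block id -> "quick" / "optimised"
        self.counts = Counter()                 # block id -> lookups so far

    def lookup(self, block_id):
        self.counts[block_id] += 1
        if block_id not in self.code:
            # First sighting: translate cheaply, since it may never run again.
            self.code[block_id] = self.quick_translate(block_id)
            self.tier[block_id] = "quick"
        elif (self.tier[block_id] == "quick"
              and self.counts[block_id] >= HOT_THRESHOLD):
            # The block is hot: pay the optimisation cost exactly once.
            self.code[block_id] = self.optimise(block_id)
            self.tier[block_id] = "optimised"
        return self.code[block_id]


if __name__ == "__main__":
    cache = TieredCache(lambda b: f"quick:{b}", lambda b: f"opt:{b}")
    print([cache.lookup("loop") for _ in range(4)])
    print(cache.lookup("init"))
```

Initialisation code that runs once stays in the cheap tier, while the loop body is upgraded on its third execution and reused from then on.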

Rosetta

(There are no trade secrets below; everything can be found on the web, and I have given a sample set of such URLs at the end of this blog.)

There had been previous attempts to translate PowerPC machine code to Intel x86. The ones I know about were at least two orders of magnitude too slow. PowerPC chips have lots of general purpose registers, x86 just a few. Since one critical way to get speed is to keep all the variables that are needed for a calculation in registers, a translation in the many-to-few direction can be daunting. And there are many other challenges, too numerous to mention here.

How then did the Transitive software engineers achieve astonishing figures like 50-70% of native speed? I have written before that many users of Rosetta were fooled into thinking that it is a simple program, perhaps because it is not huge, has no user interface and works quickly. Believe me, under the hood, it is a complex beast. It is based on Transitive’s generic architecture (which was called QuickTransit) of front-end, kernel and back-end. The complex kernel actually knows nothing about PowerPC code. The front-end translates – that is, disassembles – a basic block into a common intermediate language (more accurately an intermediate representation, as this language is never seen by human eyes, but it is interesting to think of it as an abstract machine level language). The back-end generates the final x86 code. The kernel contains every bit of “compiler optimisation trick” known to woman and man – and there are a lot of such tricks. Clearly, one of the key magic engineering ingredients is deciding, at run time, when to apply each optimisation, as they take both time and space. If a piece of machine code is only going to be executed once or twice, the translation time becomes the dominating factor.
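The front-end, kernel and back-end split can be illustrated with a deliberately tiny pipeline. Everything here is an assumption made for the sake of the example: the two-instruction guest subset, the `IR` record, the single constant-folding pass standing in for the kernel, and the fixed register mapping in `HOST_REG`. The real QuickTransit intermediate representation and optimisations are not public in this form and were far richer.

```python
from dataclasses import dataclass
from typing import List, Optional, Union


@dataclass(frozen=True)
class IR:
    """One instruction in a made-up, ISA-neutral intermediate representation."""
    op: str                       # "const" or "add"
    dst: str
    a: Union[int, str]
    b: Optional[str] = None


def front_end(guest_lines: List[str]) -> List[IR]:
    """Decode a tiny PowerPC-like textual subset into IR."""
    ir = []
    for line in guest_lines:
        mnemonic, operands = line.split(None, 1)
        args = [x.strip() for x in operands.split(",")]
        if mnemonic == "li":      # li rD, imm
            ir.append(IR("const", args[0], int(args[1])))
        elif mnemonic == "add":   # add rD, rA, rB
            ir.append(IR("add", args[0], args[1], args[2]))
        else:
            raise ValueError(f"unsupported guest instruction: {line}")
    return ir


def kernel(ir: List[IR]) -> List[IR]:
    """Constant folding on IR only; knows nothing about guest or host."""
    known, out = {}, []
    for ins in ir:
        if ins.op == "const":
            known[ins.dst] = ins.a
            out.append(ins)
        elif ins.op == "add" and ins.a in known and ins.b in known:
            value = known[ins.a] + known[ins.b]
            known[ins.dst] = value
            out.append(IR("const", ins.dst, value))
        else:
            known.pop(ins.dst, None)  # result is no longer a known constant
            out.append(ins)
    return out


# Hypothetical fixed mapping from guest registers to x86-like names.
HOST_REG = {"r3": "eax", "r4": "ebx", "r5": "ecx"}


def back_end(ir: List[IR]) -> List[str]:
    """Emit x86-like text from IR."""
    out = []
    for ins in ir:
        dst = HOST_REG[ins.dst]
        if ins.op == "const":
            out.append(f"mov {dst}, {ins.a}")
            continue
        a, b = HOST_REG[ins.a], HOST_REG[ins.b]
        if dst == a:
            out.append(f"add {dst}, {b}")
        elif dst == b:
            out.append(f"add {dst}, {a}")  # addition is commutative
        else:
            out += [f"mov {dst}, {a}", f"add {dst}, {b}"]
    return out


def translate(guest_lines: List[str]) -> List[str]:
    return back_end(kernel(front_end(guest_lines)))


if __name__ == "__main__":
    print(translate(["li r3, 2", "li r4, 40", "add r5, r3, r4"]))
```

Because the folding pass sees only IR, the same pass would serve any front-end or back-end pair, which is the attraction of the three-part design.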

Trivial but important is a relatively simple Unix facility that made it easy to hide Rosetta completely from the user. Under Unix (the basic level software that Apple’s OS X is built on) you can associate certain file types with an interpreter, such that when you double click the file the interpreter is run with the file as argument. This is what is happening when you double click on a text file that contains a Shell script: the appropriate Shell is run. For PowerPC code files, the Transitive engineers simply associated such files with Rosetta. This led to many people not realizing dynamic binary translation was happening, hence Apple’s tag line: The most amazing software you’ll never see. (Later, this tag line would become somewhat ironic.)

My own experiences as a user of Rosetta, when I bought my first Intel-based Mac, were typified by my use of Microsoft Office 2004. Provided you had lots of memory, it ran incredibly well under Rosetta’s dynamic translation. When native x86 Office 2008 first came out, its performance was worse, and I (and others) quickly switched back. Of course, over time, later releases of native x86 Office improved and eventually it became worth the upgrade.

Rosetta still has impact in 2013

Five years from its release, Apple judged that Rosetta was no longer needed when OS X Lion (10.7) was introduced, and did not supply it. You couldn’t just copy it back in because it needed all the numerous system libraries to be in PowerPC code as well as x86, and Apple did not provide these. As far as I can judge – and please correct me if I am wrong – Apple failed to announce this officially, although it was on every rumour and tech support website (but, of course, these are not read by the man on the Clapham omnibus). And Apple’s Rosetta tag line became ironic. (I did manage to find it is still featured on their Asia site.)

Thanks to Apple and Transitive, the Rosetta and Snow Leopard combination was so good that many articles appeared saying “just try to pry this from my cold dead hands”, and many people keep a copy of OS X Snow Leopard (10.6) just to run their old programs, perhaps under virtualisation or on older hardware. Some users at my University take this attitude: we estimate that as many as 20% of our Mac-using population are still on Snow Leopard. This is actually a major pain, because we need to support three generations of OS X (and soon, when Mavericks is released, four). Given that Apple changed quite a bit of their networking code in 10.7 Lion, we often have to give two sets of instructions for how to connect to, for example, Windows servers.

Still, that is not the fault of the heroic crew of Transitive software engineers that made Rosetta such a resounding achievement.

Further reading

- Experience and Good Taste in Software/Systems Design

- Snow Leopard users: Just try to pry this from my cold, dead hands

- Office not working on Lion?

- How one of Apple’s most important pieces of software came from a small UK startup

- Transitive supplying Apple with foundation for Rosetta technology

- The brains behind Apple's Rosetta: Transitive

- Manchester University made Intel Mac possible

- Rosetta, Apple Asia