The Recomputation Manifesto

By Ian Gent, Professor of Computer Science, University of St Andrews.

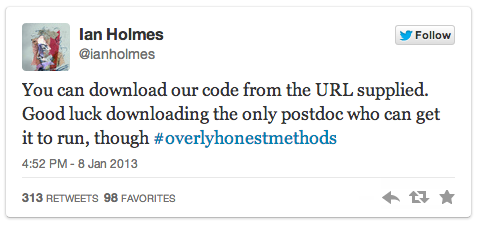

At the start of this year there was a wonderful stream of tweets with the hashtag #overlyhonestmethods. Many scientists posted the kind of methods descriptions which are true, but would never appear in a paper. My favourite is this one from Ian Holmes.

Although every scientific primer says that replication of scientific experiments is key, to quote this tweet, you'll need luck if you wish to replicate experiments in computational science. There has been significant pressure for scientists to make their code open, but this is not enough. Even if I hired the only postdoc who can get the code to work, she might have forgotten the exact details of how an experiment was run. Or she might not know about a critical dependency on an obsolete version of a library.

The current state of experimental reproducibility in computer science is lamentable. The result is inevitable: experimental results enter the literature which are just wrong. I don’t mean that the results don’t generalise. I mean that an algorithm which was claimed to do something just does not do that thing: for example, if the original implementation was bugged and was in fact a different algorithm. I suspect this problem is common, and I know for certain that it has happened. Here’s an example from my own research area, discovered by my friend and tenacious pursuer of replication Patrick Prosser.

How it should be: the Recomputation Manifesto

I’ve written The Recomputation Manifesto, which describes how I think things should be (the full text is available at arXiv). I’m now going to talk about the six points of the manifesto, and say a bit more about the last two points, because they often prompt discussion.

- Computational experiments should be recomputable for all time

- Recomputation of recomputable experiments should be very easy

- It should be easier to make experiments recomputable than not to

- Tools and repositories can help recomputation become standard

- The only way to ensure recomputability is to provide virtual machines

- Runtime performance is a secondary issue

1. Computational experiments should be recomputable for all time

A quick word about the word. I’m using the word recomputation to mean exact replication of a computational experiment. I wanted to avoid a name clash with replication. Recomputation isn’t a neologism - the word predates the USA - but it is adding a nuance.

But should experiments be recomputable for all time? I think so. I don’t see any sensible notion of a useful life beyond which experiments can be discarded. Imagine if physicists could keep Galileo’s telescopes for almost nothing, but couldn’t be bothered. Computer storage gets exponentially cheaper over time, so the cost of storing for a few years is almost the same as storing forever. There are issues to do with changing machines, but people are on it.
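The storage argument is a geometric series, and a small sketch makes it concrete. It assumes (purely for illustration) that the yearly cost of keeping the same data falls by a constant factor each year; the function name `storage_cost` and the 30% yearly price drop are hypothetical choices, not measured figures.

```python
def storage_cost(first_year_cost, yearly_factor, years=None):
    """Total cost of storing data when each year's cost is the previous
    year's cost multiplied by yearly_factor (0 < yearly_factor < 1).

    years=None means "forever", i.e. the limit of the geometric series.
    """
    if not 0 < yearly_factor < 1:
        raise ValueError("yearly_factor must be strictly between 0 and 1")
    if years is None:
        return first_year_cost / (1 - yearly_factor)
    return first_year_cost * (1 - yearly_factor ** years) / (1 - yearly_factor)


if __name__ == "__main__":
    factor = 0.7  # assumption: storage gets 30% cheaper every year
    forever = storage_cost(1.0, factor)
    for years in (5, 10, 20):
        share = storage_cost(1.0, factor, years) / forever
        print(f"{years} years costs {share:.1%} of storing forever")
```

Under this assumption the share paid in the first n years is 1 - factor^n, so it approaches the cost of storing forever quickly. The conclusion depends on prices continuing to fall at a steady rate.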

2. Recomputation of recomputable experiments should be very easy

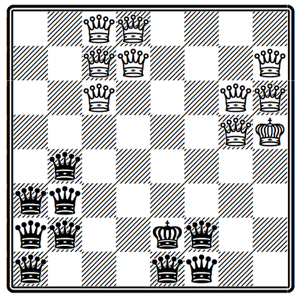

The next paragraph contains a claim about a chess position. It’s based on an experiment I ran. Anyone in the world can check that the experiment does what I say. The instructions for rerunning it are a few lines.

The illustrated position contains the king and all nine possible queens of each colour, i.e. the original and eight promoted pawns. No queen is on the same row, column or diagonal as any piece of the opposite colour. The illustrated position is the only possible chess position for which the description of the previous paragraph is true, excepting rotations and reflections of the chessboard, or swapping black and white.

This experiment is available at http://recomputation.org/cp2013/experiment1.html. After downloading the experiment box and booting, it takes only about 1 minute to run.

It’s not true that I can make available an experiment which proves my scientific claims are true. My code could be wrong, or the libraries I’m using (the excellent Gecode constraint library) could be bugged. But it is true that I can make available an experiment which allows any scientist in the world - or anyone else - the chance to recompute my experiment to make sure its results are as I said. And it is true that anyone can look inside my experiment to see if they can spot any mistakes, or use any good aspects of my experimental setup in their own experiments. The experiment also contains the source code and all scripts necessary to run it.
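One part of the claim can be checked without rerunning the experiment: whether a given position has the stated property. The sketch below is not the code in the experiment box. It is a hypothetical, independent checker that reads the claim literally, so two pieces count as sharing a line even if another piece stands between them. The data layout (dictionaries with `king` and `queens` entries, squares as `(column, row)` tuples) is an assumption made for the example. It says nothing about uniqueness, which needs the full search.

```python
def shares_line(a, b):
    """True if squares a and b share a row, column or diagonal."""
    (col_a, row_a), (col_b, row_b) = a, b
    return (col_a == col_b or row_a == row_b
            or abs(col_a - col_b) == abs(row_a - row_b))


def satisfies_claim(white, black, queens_per_side=9, board_size=8):
    """Check the property claimed for the position.

    white and black are dicts of the form
    {"king": (col, row), "queens": [(col, row), ...]}.
    """
    for side in (white, black):
        if len(side["queens"]) != queens_per_side:
            return False
    squares = [white["king"], *white["queens"], black["king"], *black["queens"]]
    on_board = all(0 <= c < board_size and 0 <= r < board_size
                   for c, r in squares)
    if not on_board or len(set(squares)) != len(squares):
        return False
    # No queen may share a line with any piece of the opposite colour.
    for own, other in ((white, black), (black, white)):
        enemy_pieces = [other["king"], *other["queens"]]
        for queen in own["queens"]:
            if any(shares_line(queen, piece) for piece in enemy_pieces):
                return False
    return True
```

To use it on the illustrated position, transcribe the ten squares for each colour from the diagram and call `satisfies_claim(white, black)`. The `queens_per_side` parameter exists only so that the checker can be tried on smaller toy positions.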

By the way, to confirm that the instructions for rerunning are brief, here they are in toto in a Linux/Mac environment. Before running it you need two free tools, Vagrant and Virtual Box. Open a terminal and…

mkdir anydir

cd anydir

vagrant init experiment1 http://recomputation.org/cp2013/experiment1/recomputation-QueensPuzzle-b.box

vagrant up

In a few minutes the experiment should have run and the results should be in anydir. The only things I omitted are the instructions to free up the resources used at the end: vagrant destroy and then vagrant box remove experiment1 virtualbox, since you ask.

An aside: while it looks like I am blowing my own trumpet by giving you an example of an experiment, this is not how I have normally done things. Probably none of my own scientific experiments are recomputable. The example shows how things should be, not the way I have done them in the past. If you want me to blow somebody’s trumpet, I choose C. Titus Brown, who has made available a virtual machine (VM) with experiments for a serious paper instead of a toy chess puzzle.

3. It should be easier to make experiments recomputable than not to

There’s a technical sense in which this can never be true: just don’t do the extra step to make your experiment recomputable. So this statement needs a little justification. What I really believe is that we can make it very easy to make experiments recomputable, and that the benefits will be significant. Not so much the benefit of feeling good that an experiment is repeatable, but the benefit of being able to rerun experiments for the final copy of a paper instead of not being able to. Or being able to rerun them with new data, or a new experiment like the old one… all made easy by ensuring that your old experiments are recomputable.

4. Tools and repositories can help recomputation become standard

There are already a number of tools out there, and a smaller number of repositories. Among the new repositories springing up is one that I started at recomputation.org. The particular focus of this repository will be scientific experiments, and trying to make them recomputable for all time (or as long as we can). I’ve started a list of resources which points you at some interesting tools, repositories, and other stuff.

5. The only way to ensure recomputability is to provide virtual machines

The reason that we need to store virtual machines with experiments in them is that nothing else can guarantee to get the original experiment to run. Code you make available today can be built with only minor pain by many people on current computers, but that is unlikely to be true in five years, and hardly credible in twenty. So the best - and I think only realistic - way to ensure recomputability is to provide virtual machines. I don’t think this claim is either novel or controversial. Bill Howe has made the case in detail, and very recently David Flanders made a similar case.

There is a problem with virtual machines: they are big. The VM for the experiment above is almost 400MB, which makes downloading and uploading the real problem. As well as being cheap, disk space is scalable (because I can buy more disks), but download speed limits how many VMs somebody can download and run. Upload speeds limit how fast they can give us VMs, and some experiments might need hundreds of gigabytes of data.

I hate it when people say “There’s no problem” and then say “because you can do X”. What they mean is: “Yes, there’s a problem and you can do X as a workaround.” In which case... yes there’s a problem with download speeds, but I think there are workarounds.

We are currently working on making experiments for the conference CP2013 recomputable (“we” means Lars Kotthoff and me). We’ll be running a tutorial at the conference on recomputation. We are not asking people to send us VMs, but to send us what they think we need to get the experiment to run. This is usually a few megabytes of code and maybe executables. Assuming we can run it in a standard environment, we can make our own VM without one ever being sent to us. We can also provide zipped versions of the experiment directory for people to download. If they have the right environment they can run the experiment too. So the huge up- or download is not always necessary. But we still have to create and store the full VM, because we can’t know what trivial change to the environment might stop the experiment working, or (worse) make it appear to work but actually have a major change in it.
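To make "trivial change to the environment" a little more concrete, here is a minimal sketch of recording an environment alongside an experiment and comparing two such records. It is not how recomputation.org works. It only covers the Python layer (interpreter, operating system, installed packages), and the names `environment_snapshot` and `changed_entries` are hypothetical. A record like this can show that something changed, but it cannot restore the old environment, which is why the full VM is still needed.

```python
import json
import platform
import sys
from importlib import metadata


def environment_snapshot():
    """Collect a few facts about the environment an experiment ran in."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions with broken metadata
            packages[name.lower()] = dist.version
    return {
        "python": sys.version.split()[0],
        "implementation": platform.python_implementation(),
        "os": platform.system(),
        "machine": platform.machine(),
        "packages": dict(sorted(packages.items())),
    }


def changed_entries(old, new):
    """Return the top-level keys and package names whose values differ."""
    changed = [k for k in old if k != "packages" and old[k] != new.get(k)]
    names = set(old["packages"]) | set(new["packages"])
    changed += sorted(
        f"packages.{n}" for n in names
        if old["packages"].get(n) != new["packages"].get(n)
    )
    return changed


if __name__ == "__main__":
    # Store this output next to the experiment's results.
    print(json.dumps(environment_snapshot(), indent=2))
```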

6. Runtime performance is a secondary issue

This is another point that causes a lot of discussion. Many scientific experiments in computing seek to show that one method is faster than another method. But my manifesto says that this is a secondary issue, which can - understandably - produce incredulity. Yes there’s a real problem. Run time performance is often critical and normally can’t be reproduced in a VM, but instead of offering workarounds I suggest these two thoughts.

First is the obvious point. If I can’t run your experiment at all, then I can’t reproduce your times. So recomputation is the sine qua non of reproducing runtimes.

Second is a less obvious one. The more I think about it, the less I think there is a meaningful definition of the one true run time. I have put significant effort into making sure that runtimes are consistent but, however we do this, it makes our experiments less realistic. With rare exceptions (perhaps some safety critical systems) our algorithms will not be used in highly controlled situations, moderated to reduce variability between runs or to maximise speed of this particular process. Algorithms will be run as part of a larger system, in a computer sometimes with many other processes and sometimes with few and, because of the way that computing has developed, sometimes in raw silicon and sometimes in virtual machines. So the more I think about it, the more I think that what matters is the distribution of run times. For this, your experiment on your hardware is important, but so are future recomputations on a wide variety of VMs running on a variety of different hardware.
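As a rough illustration of reporting a distribution rather than a single number, the sketch below times repeated runs of a task and summarises the spread. The function name `runtime_distribution` and the default of 30 repeats are assumptions made for the example. A real study would also record the machine or VM each sample came from.

```python
import statistics
import time


def runtime_distribution(task, repeats=30):
    """Run task() several times and summarise the wall-clock run times."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        task()
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "runs": repeats,
        "min": samples[0],
        "median": statistics.median(samples),
        "max": samples[-1],
        # Sample standard deviation needs at least two data points.
        "stdev": statistics.stdev(samples) if repeats > 1 else 0.0,
    }


if __name__ == "__main__":
    summary = runtime_distribution(lambda: sorted(range(100_000), reverse=True))
    for key, value in summary.items():
        print(f"{key}: {value}")
```

Collecting the same summary from recomputations on different hosts and VMs would give the kind of distribution described above, with each environment contributing its own set of samples.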

So my claim remains: runtime performance is secondary to the crux of recomputation. And if you make your experiments recomputable, maybe over time we will get a better understanding of how your performance is affected by the underlying real or virtualised hardware.

Recomputation.org and software sustainability

I’ve mentioned the website recomputation.org, intended to be a repository of recomputable experiments for all time. Our slogan is “If we can compute your experiment now, anyone can recompute it twenty years from now”. Twenty years - never mind all time - is an ambitious target, especially for a repository which now holds one experiment (the chess puzzle). We are ambitious, and unashamedly so. We want to change the way computer science is done. We might not make it. But it is better to try and fail, than not to try. Computer science can be better, and one way is by those of us who care putting effort into making experiments recomputable and keeping them that way.

I look forward to working with the Software Sustainability Institute and I think the interest crosses both ways. There may be things that the Institute does that we can help with, and of course there are many, many things that the Institute can do that will help us. Apart from anything else, a critical point is to ensure the sustainability of our own software and systems over the long term.

Oh, and by the way, it’s not just software sustainability I’m looking forward to working with. If something I’ve said sparks your interest - or your disagreement - I’d love to hear from you and maybe work with you to make computing more recomputable in the future.

About the Author

Ian Gent is Professor of Computer Science at the University of St Andrews, Scotland. Of his non peer-reviewed papers, his most cited by far is How Not To Do It, a collection of embarrassing mistakes he and colleagues have made in computational experiments. To show how good we are at not doing things right, we even mis-spelt the name of one of the authors: it’s Ewan, not Ewen MacIntyre!