Everyone has read at least one paper lately that started “Machine learning has made an impact in the entire field”.

Doesn’t matter which field.

Then ChatGPT happened, and even those peddling crypto and web3 suddenly talked about AI.

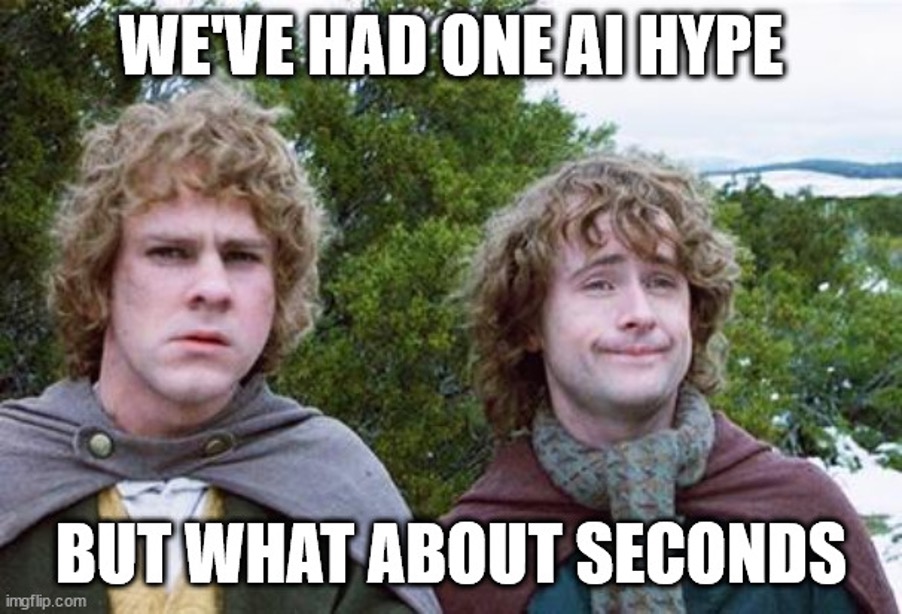

We've got AI hype on top of our AI hype.

But with that, a few conversations became disproportionately harder.

The Emergence of Alignment

It happened when ChatGPT was released.

People, including myself, couldn’t believe how good this type of model had become. The texts were fluent. Depending on your instructions, there was what seemed like coherent thought.

To some, it seemed a bit like magic, or maybe even like consciousness.

Machine learning papers had already been published without peer review at a break-neck pace before ChatGPT was released. Some models, like the popular YOLO models, were even released completely without formal publication.

So, publishing claims that ChatGPT showed sparks of Artificial General Intelligence, without peer review and as quickly as possible, fit into the culture of machine learning research.

It’s a compelling narrative.

What if we created an intelligence? Wouldn’t that at least be cool?

But quickly, questions emerged about the long-term safety of humanity. If we created superhuman intelligence, how do we ensure it doesn’t turn against us? How do we ensure AI is aligned with humanity's goals or even survival?

The idea of AI alignment was born.

The sudden interest in Long-term Ethics

Suddenly, companies with big, well-paid AI teams, especially those that had recently fired their entire ethics teams, were talking about the long-term view.

AI alignment was everywhere.

These companies were even pushing for regulation of these AIs and future development of AIs. Some researchers started comparing themselves to Oppenheimer. Quite the emotive comparison. Let’s ensure the long-term survival of humanity by regulating the alignment of AI development.

Yet behind closed doors, those same companies pushed for deregulation in the EU, where the AI Act would have wide implications.

It’s compelling, though. We all want to survive. We’re all a bit scared of the long-term effects of this unknown entity. It’s the Siren call. Or it’s a good set-up for a trick.

The Magic Trick

From street magic to David Copperfield, magicians all work with one simple trick.

Attention.

A magician will direct your attention to the hand they want you to look at. Then they can perform the sleight of hand with the other, unobserved hand.

When we get enough people to pay attention to the long-term harms and be satisfied with the regulation of long-term goals, we can divert the attention from short-term impacts.

Not too dissimilar to other industries that have spent resources to divert our attention from lung cancer and climate change.

What are we missing in the Short Term?

In the short term, we know machine learning can already do harm.

From surveillance to increased premiums. Rejected loan applications that can’t be explained by random blips in The Algorithm. Biases in machine learning models and algorithmic decision processes are well-documented.

And then there’s, of course, the CO2 footprint of AI model training, which will be an actual proven problem in the future. We don’t have to speculate in this case.

These biases and discriminatory correlations can be present in the training data, exacerbated through exploratory data analysis and then reinforced by humans through automation bias. And then there is, of course, the loss of privacy through widespread data collection practices.

Something that already affects us every day and could be addressed by data cleaning practices and regulations. (Coincidentally, why Google Bard is not available in the EU right now.)

Scientists using Machine Learning

As researchers, this goes even deeper.

Machine learning has proven extremely potent in many fields of science. However, many basic tutorials don’t adequately reflect the realities of real-world data.

It can be a computer vision model that learns to recognise the code of a hospital that mostly treats advanced cases, and so cheats through this shortcut instead of learning anything about the disease. Or it could even be researchers training on the test data to improve the score, beat the state of the art and be able to publish. Accidents and questionable behaviours alike can hide behind machine learning scores.
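One guard against both problems is to split the data by group, so that every sample from one hospital lands entirely in either the training set or the test set, and the test set is never touched during training. Below is a minimal sketch of that idea in plain Python. The function name `group_train_test_split` and the toy hospital labels are made up for illustration and are not taken from any particular library. In practice, scikit-learn's `GroupShuffleSplit` provides the same kind of split.

```python
import random


def group_train_test_split(groups, test_fraction=0.25, seed=0):
    """Return (train_idx, test_idx) so that no group appears in both sets.

    `groups` holds one label per sample, e.g. the hospital a scan came from.
    """
    unique_groups = sorted(set(groups))
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(unique_groups)

    # Hold out whole groups, never individual samples.
    n_test = max(1, round(len(unique_groups) * test_fraction))
    test_groups = set(unique_groups[:n_test])

    train_idx = [i for i, g in enumerate(groups) if g not in test_groups]
    test_idx = [i for i, g in enumerate(groups) if g in test_groups]
    return train_idx, test_idx


# Hypothetical toy data: eight scans from four hospitals.
hospitals = ["A", "A", "B", "B", "C", "C", "D", "D"]
train_idx, test_idx = group_train_test_split(hospitals, test_fraction=0.25, seed=42)

train_hospitals = {hospitals[i] for i in train_idx}
test_hospitals = {hospitals[i] for i in test_idx}

# A model evaluated on test_idx has never seen these hospitals in training.
assert train_hospitals.isdisjoint(test_hospitals)
```

If the score drops sharply when you move from a random split to a group-wise split like this, that is a hint the model may have been leaning on a shortcut such as the hospital code.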

These publications can chart the entire trajectory of a field, like we unfortunately recently discovered with fabricated data in Alzheimer’s research.

We have a responsibility for AI safety, even if it’s just our tiny little field.

Why I created ML.Recipes

I wrote the resource ML.recipes to empower scientists to quickly learn about best practices.

The Jupyter book has code examples that everyone can easily re-use. Throughout the book, explanations show how to work with this information and what the benefits of each section are.

These benefits are generally divided into “ease review”, which makes it easy for others to gauge the quality of a machine learning contribution, “increase citations”, which lets others verify and re-use your code and model, and “foster collaboration”, which is about standards and share-ability of your science.

Let the billionaires and effective altruists discuss AI alignment while we build useful tools and insights to advance science and humanity. Tools that are safe and science that is valid.

Large language models may not be our end just yet.